Two Papers from the Lab Accepted at NeurIPS 2023


NeurIPS, or the Conference on Neural Information Processing Systems, is a top-tier international conference in the fields of machine learning and computational neuroscience. This article introduces the two papers from the Laboratory of Brain Atlas and Brain-Inspired Intelligence, Institute of Automation, Chinese Academy of Sciences, accepted at NeurIPS 2023.

1. Spike-driven Transformer

Authors: Yao Man, Hu Jiakui, Zhou Zhaokun, Yuan Li, Tian Yonghong, Xu Bo, Li Guoqi

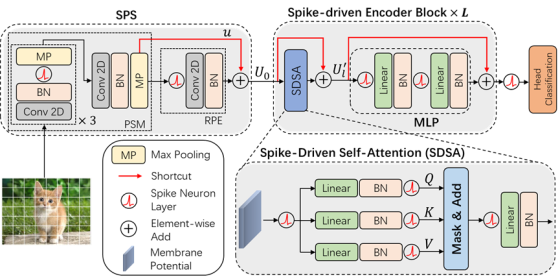

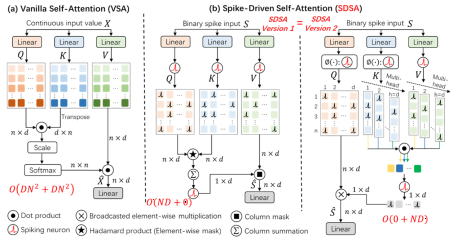

This paper introduces the first Spike-driven Transformer, a network built entirely on sparse addition operations. The proposed architecture has four distinguishing properties: (1) it is event-driven, so no computation is triggered when the input is zero; (2) it communicates through binary spikes, so every matrix multiplication involving a spike matrix reduces to sparse addition; (3) its self-attention mechanism has linear complexity in both the token and channel dimensions; and (4) operations between the spike-form Query, Key, and Value matrices are carried out with masks and additions only. To achieve this, the paper designs a novel Spike-Driven Self-Attention (SDSA) operator that uses only mask and addition operations, with no multiplications, and consumes up to 87.2 times less computation energy than vanilla self-attention. The network also rearranges all residual connections so that every signal passed between neurons is a binary spike. In experiments, the Spike-driven Transformer reaches 77.1% top-1 accuracy on ImageNet-1K, the best result reported to date in the spiking neural network (SNN) field.

Figure 1. Spike-driven Transformer architecture diagram

Figure 2. Spike-Driven Self-Attention (SDSA) operator
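
The mask-and-addition idea behind the SDSA operator described above can be illustrated with a small toy example. This is only a sketch under stated assumptions, not the authors' implementation: the function name `sdsa_sketch`, the list-of-lists layout (tokens by channels), and the simple `threshold` comparison used in place of a spiking neuron layer are all hypothetical choices made for illustration. For the actual operator, see the paper and code linked below.

```python
def sdsa_sketch(q, k, v, threshold=1):
    """Toy mask-and-add attention over binary spike matrices.

    q, k, v: lists of rows, shape (tokens, channels), entries 0 or 1.
    threshold: hypothetical firing threshold standing in for a spiking neuron.
    """
    n_tokens = len(q)
    n_channels = len(q[0])

    # Step 1: mask Q with K (logical AND on binary spikes) and add up
    # the surviving spikes per channel. Only additions are used.
    counts = [0] * n_channels
    for i in range(n_tokens):
        for c in range(n_channels):
            if q[i][c] and k[i][c]:
                counts[c] += 1

    # Step 2: turn the per-channel counts back into binary spikes.
    # Assumption: a plain threshold replaces the spiking neuron layer.
    gate = [1 if counts[c] >= threshold else 0 for c in range(n_channels)]

    # Step 3: mask V with the channel gate. No multiplication is needed
    # because both operands are binary.
    return [
        [v[i][c] if gate[c] else 0 for c in range(n_channels)]
        for i in range(n_tokens)
    ]


q = [[1, 0, 1], [0, 0, 1]]
k = [[1, 1, 0], [0, 0, 1]]
v = [[1, 1, 1], [0, 1, 1]]
result = sdsa_sketch(q, k, v)
```

Each step visits every (token, channel) entry once, so the cost of this sketch grows linearly with the number of tokens and with the number of channels, which mirrors the linear-complexity property listed in the summary.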

Paper link:

https://arxiv.org/abs/2307.01694

Code link:

https://github.com/BICLab/Spike-Driven-Transformer

2. Bullying10K: A Large-Scale Neuromorphic Dataset towards Privacy-Preserving Bullying Recognition

Authors: Dong Yiting, Li Yang, Zhao Dongcheng, Shen Guobin, Zeng Yi

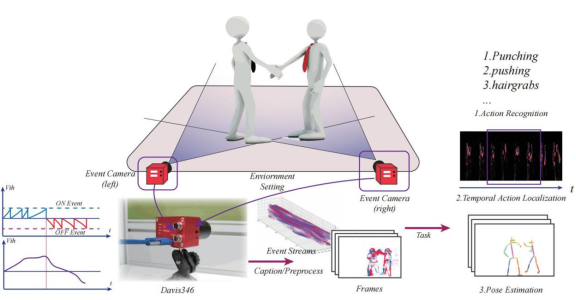

Violent behavior is common in daily life and poses significant threats to individuals' physical and mental health. Surveillance cameras in public spaces have been shown to be effective at deterring and preventing such incidents, but their widespread deployment raises concerns about privacy infringement. To address this, the paper uses dynamic vision sensor (DVS) cameras to detect violent events while preserving privacy, since these sensors record changes in pixel brightness rather than static images. The authors built the Bullying10K dataset, which covers a variety of actions from real-life scenarios, including complex motion and occlusions. It provides three evaluation benchmarks: action recognition, temporal action localization, and pose estimation. With 10,000 event clips totaling 12 billion events and 255 GB of data, Bullying10K balances violence detection against the protection of personal privacy. It also poses new challenges for neuromorphic datasets, serves as a valuable resource for training and developing privacy-preserving video systems, and opens new possibilities for innovative methods in these fields.
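
To give a sense of what data from a DVS camera looks like, the following minimal sketch accumulates a stream of brightness-change events into a per-pixel count frame. It is an illustration only: the `(t, x, y, p)` tuple layout and the function name `accumulate_events` are assumptions made here, not the actual file format or tooling of Bullying10K.

```python
def accumulate_events(events, width, height):
    """Count events per pixel, with OFF and ON polarities kept separately.

    events: iterable of (t, x, y, p) tuples (assumed layout), where t is a
            timestamp, (x, y) is the pixel, and p > 0 means brightness rose.
    Returns a height x width grid whose cells are [off_count, on_count].
    """
    frame = [[[0, 0] for _ in range(width)] for _ in range(height)]
    for t, x, y, p in events:
        channel = 1 if p > 0 else 0  # index 1 = ON, index 0 = OFF
        frame[y][x][channel] += 1
    return frame


# Three hypothetical events on a 2 x 2 sensor.
events = [(0, 1, 0, 1), (5, 1, 0, 1), (7, 0, 1, 0)]
frame = accumulate_events(events, width=2, height=2)
```

Pixels where nothing changes never produce an event, so static appearance is not recorded; only motion leaves a trace in the resulting counts.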

Related links:

https://figshare.com/articles/dataset/Bullying10k/19160663

https://www.brain-cog.network/dataset/Bullying10k/
