Random Heterogeneous Spiking Neural Networks Enhance Security Capabilities: Improving Adversarial Robustness via Brain-Inspired Information Processing Mechanisms


On June 3, 2025, the Brain-Inspired Cognitive AI Group at the Laboratory of Brain Atlas and Brain-Inspired Intelligence, Institute of Automation, Chinese Academy of Sciences, together with the Beijing Key Laboratory for AI Security and Super Alignment, the Beijing Institute for Advanced AI Security and Governance, and the Center for Long-Term Intelligence Research, published a new paper titled “Random Heterogeneous Spiking Neural Network for Adversarial Defense” in iScience, a journal under Cell Press.

By introducing random mechanisms and neuronal heterogeneity into spiking neural networks (SNNs), the team enabled the network to generate diverse spiking patterns across different trials. Rigorous testing against a range of adversarial attacks showed that the proposed method improves the network’s adversarial defense capability while incurring minimal loss in accuracy.

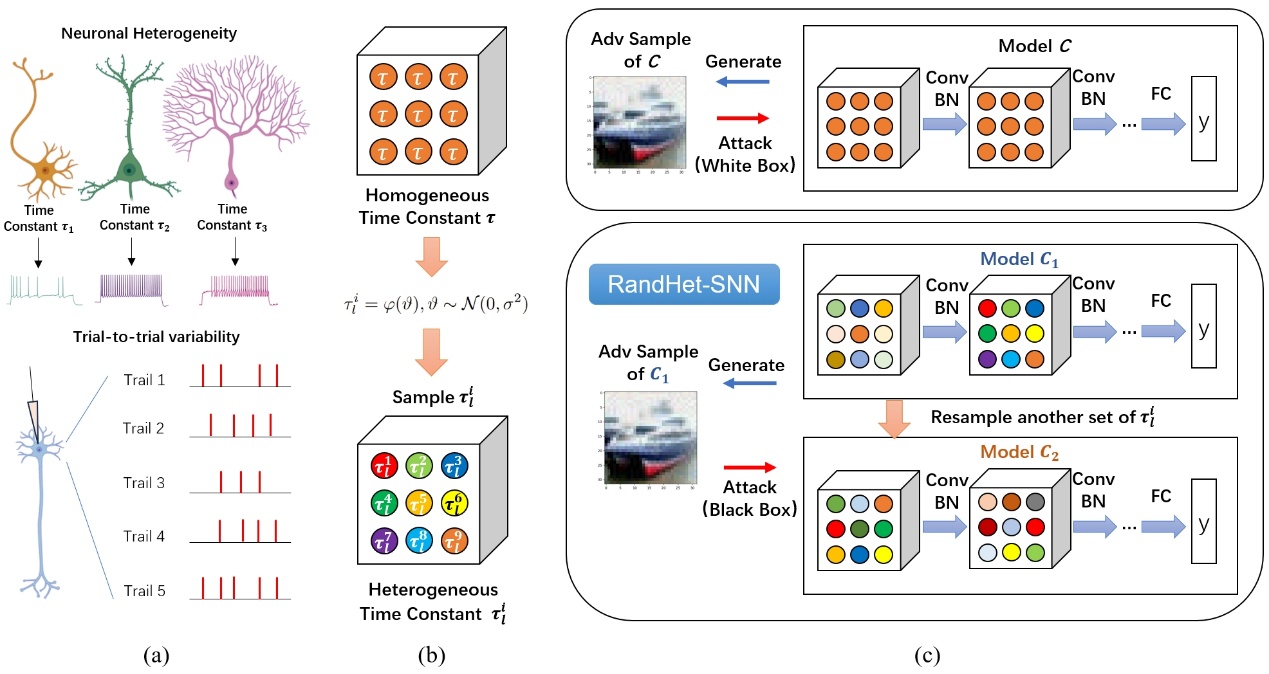

Brain-inspired information processing: This study introduces neuronal heterogeneity and trial-to-trial variability of spiking activity into SNNs, building a Random Heterogeneous Spiking Neural Network (RandHet-SNN).

Reduced performance loss: By randomizing neuron time constants, the method significantly improves adversarial robustness while reducing model performance loss.

Stronger defense capability: RandHet-SNN's adversarial robustness surpasses randomization methods used in artificial neural networks (ANNs).

Adversarial robustness is a critical component in developing trustworthy AI systems. SNNs’ vulnerability to adversarial attacks undermines their reliability in safety-critical applications such as autonomous driving, and it highlights key differences between SNN-based visual processing and the human visual system.

Introducing randomness is an effective approach to enhance the adversarial robustness of neural networks. Biological visual cortices exhibit significant intrinsic randomness in neural activity, suggesting a potential link between random information processing and robustness. For the same stimulus, neuronal activity in the brain varies due to inherent stochasticity, enabling the system to filter environmental noise and focus on salient information. Additionally, biological neural networks show pronounced electrophysiological heterogeneity, with multiple neuron types contributing to system-level redundancy and fault tolerance. Prior studies have demonstrated that this diversity of neuronal parameters can effectively enhance network robustness.

Existing work has mainly introduced random parameters into ANN weights, biases, or normalization layers, but rarely inside the activation functions themselves. This approach fails to capture the true heterogeneity of biological neurons. To address this limitation, the present study proposes RandHet-SNN, which randomizes neuron time constants within the SNN. This introduces both neuronal heterogeneity and stochasticity into the model’s responses.

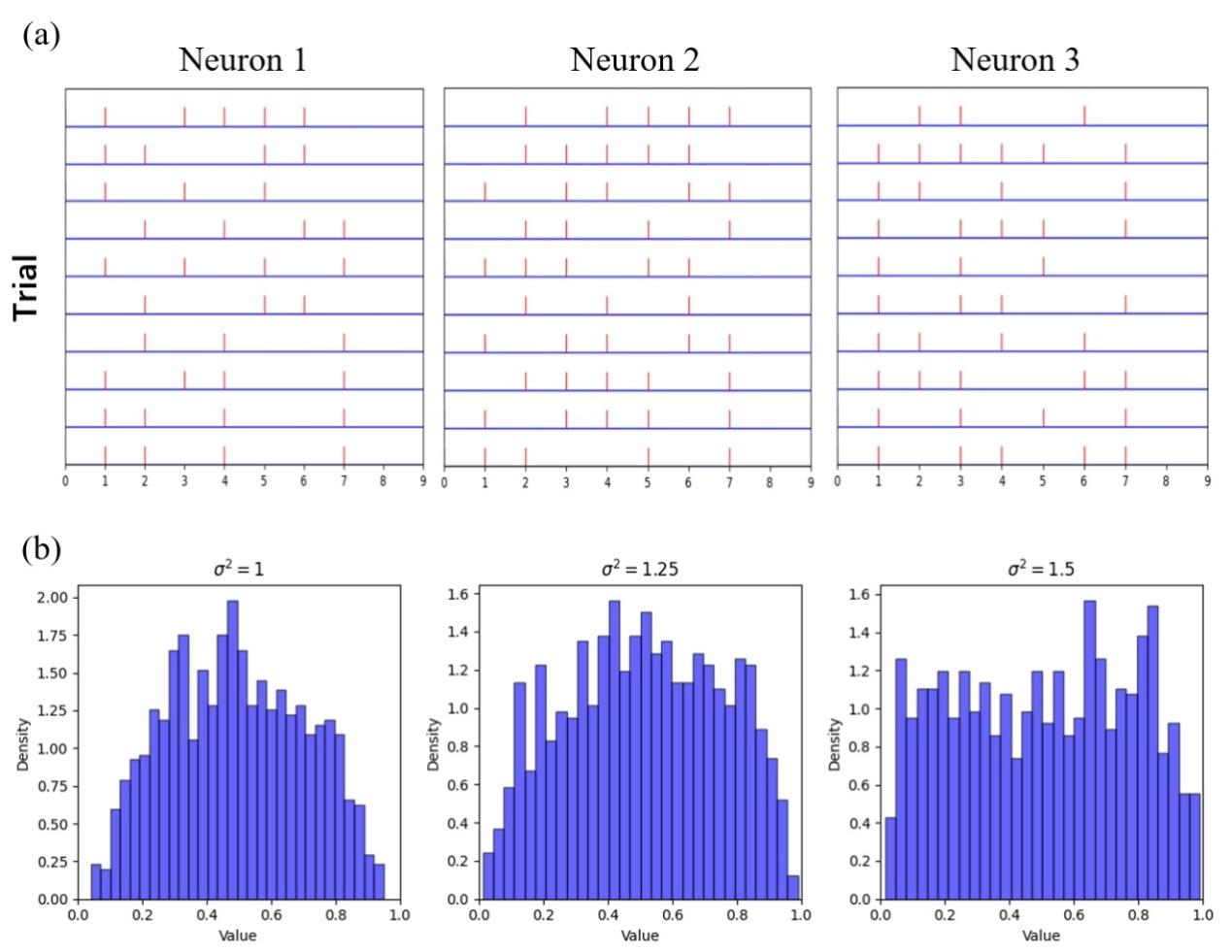

In RandHet-SNN, the time constant of each neuron in every layer is an independent random variable. During forward propagation, these time constants are sampled from predefined distributions, giving the entire network heterogeneous and stochastic properties. The study considers two sampling strategies:

The first samples the time constant independently at every time step.

The second samples it once at the start of forward propagation and holds it fixed for all subsequent time steps.

By default, RandHet-SNN uses the first sampling method, while models using the second method are denoted as RandHet-SNN*. In each forward pass, independent sampling ensures that neurons exhibit differentiated dynamics across trials, enhancing network randomness and heterogeneity. The random mechanism in RandHet-SNN disrupts attackers’ ability to exploit deterministic parameters, effectively turning white-box attack scenarios into black-box ones.
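The two sampling strategies described above can be illustrated with a minimal NumPy sketch of a single layer of leaky integrate-and-fire (LIF) neurons. This is not the authors' implementation: the function name `lif_forward`, the clipped Gaussian distribution, the membrane update rule, and all parameter values are assumptions chosen for illustration only.

```python
import numpy as np

def lif_forward(inputs, rng, tau_mean=2.0, tau_std=0.5, per_step=True, v_th=1.0):
    """Simulate one layer of LIF neurons with random time constants.

    inputs: array of shape (T, N), input current per time step and neuron.
    per_step=True  resamples every neuron's tau at every time step (first strategy).
    per_step=False samples tau once and holds it fixed for all steps (second strategy).
    Returns the (T, N) spike train and the (T, N) time constants that were used.
    """
    T, N = inputs.shape

    def sample_tau():
        # Assumed distribution: Gaussian clipped to tau >= 1 so that 1/tau stays in (0, 1].
        return np.clip(rng.normal(tau_mean, tau_std, size=N), 1.0, None)

    tau = sample_tau()
    v = np.zeros(N)                      # membrane potentials
    spikes = np.zeros((T, N))
    taus = np.zeros((T, N))
    for t in range(T):
        if per_step and t > 0:
            tau = sample_tau()           # fresh, independent draw at this time step
        taus[t] = tau
        v = v + (inputs[t] - v) / tau    # leaky integration (assumed update rule)
        fired = v >= v_th
        spikes[t] = fired
        v = np.where(fired, 0.0, v)      # hard reset after a spike
    return spikes, taus

rng = np.random.default_rng(0)
x = np.full((4, 8), 1.5)                 # constant input: 4 time steps, 8 neurons
spikes_a, taus_a = lif_forward(x, rng, per_step=True)
spikes_b, taus_b = lif_forward(x, rng, per_step=False)
```

Because every call draws new time constants, repeated forward passes on the same input can produce different spike trains, which is the trial-to-trial variability the article describes.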

Figure 1. Schematic of RandHet-SNN operating principle.

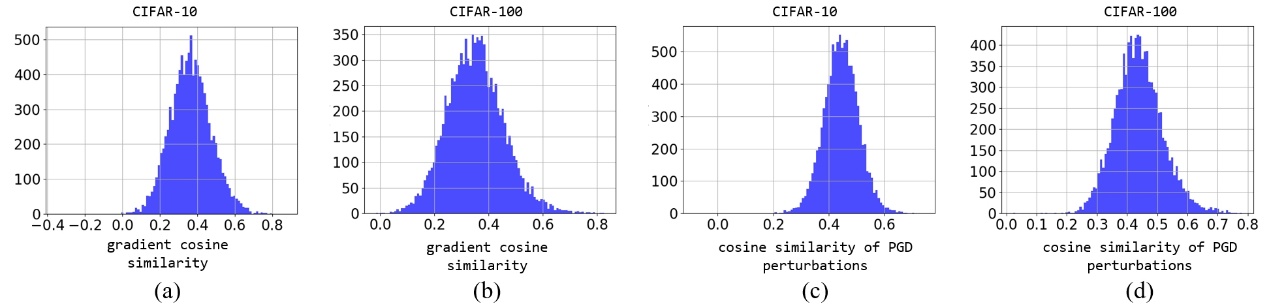

RandHet-SNN’s randomness causes gradients for the same input to vary across trials. Different time constant samples lead to different gradients, and the study analyzed those variations via cosine similarity. After training RandHet-SNN on the CIFAR-10 and CIFAR-100 datasets, the team visualized the distribution of gradient cosine similarities, which clustered around 0.3. This indicates that adversarial attack transferability between models under different time constant samples is relatively low.

Furthermore, the study evaluated the cosine similarity between generated adversarial perturbations. These similarities fell mainly between 0.4 and 0.5, indicating significant differences induced by RandHet-SNN’s stochastic mechanisms. Such differences enhance adversarial robustness: attacks tailored to one sampling outcome struggle to transfer to others.
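The similarity analysis above can be sketched in a few lines of NumPy. The helper names `cosine_similarity` and `pairwise_cosine` are hypothetical, and the random vectors below are only placeholders for gradients or perturbations that would, in practice, come from repeated forward and backward passes through the randomized network.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two arrays, flattened to vectors."""
    a, b = np.ravel(a), np.ravel(b)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def pairwise_cosine(vectors):
    """Cosine similarity for every unordered pair in a list of arrays."""
    n = len(vectors)
    return [cosine_similarity(vectors[i], vectors[j])
            for i in range(n) for j in range(i + 1, n)]

# Placeholder data: 5 "gradients" of a 3x32x32 input, one per time-constant sample.
rng = np.random.default_rng(0)
grads = [rng.normal(size=(3, 32, 32)) for _ in range(5)]
similarities = pairwise_cosine(grads)    # 10 values, one per pair
```

A histogram of `similarities` computed from real gradients is the kind of distribution the article reports; values well below 1 mean that a perturbation computed under one sample of time constants points in a noticeably different direction under another.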

Figure 2. Gradient and adversarial perturbation cosine similarity analysis.

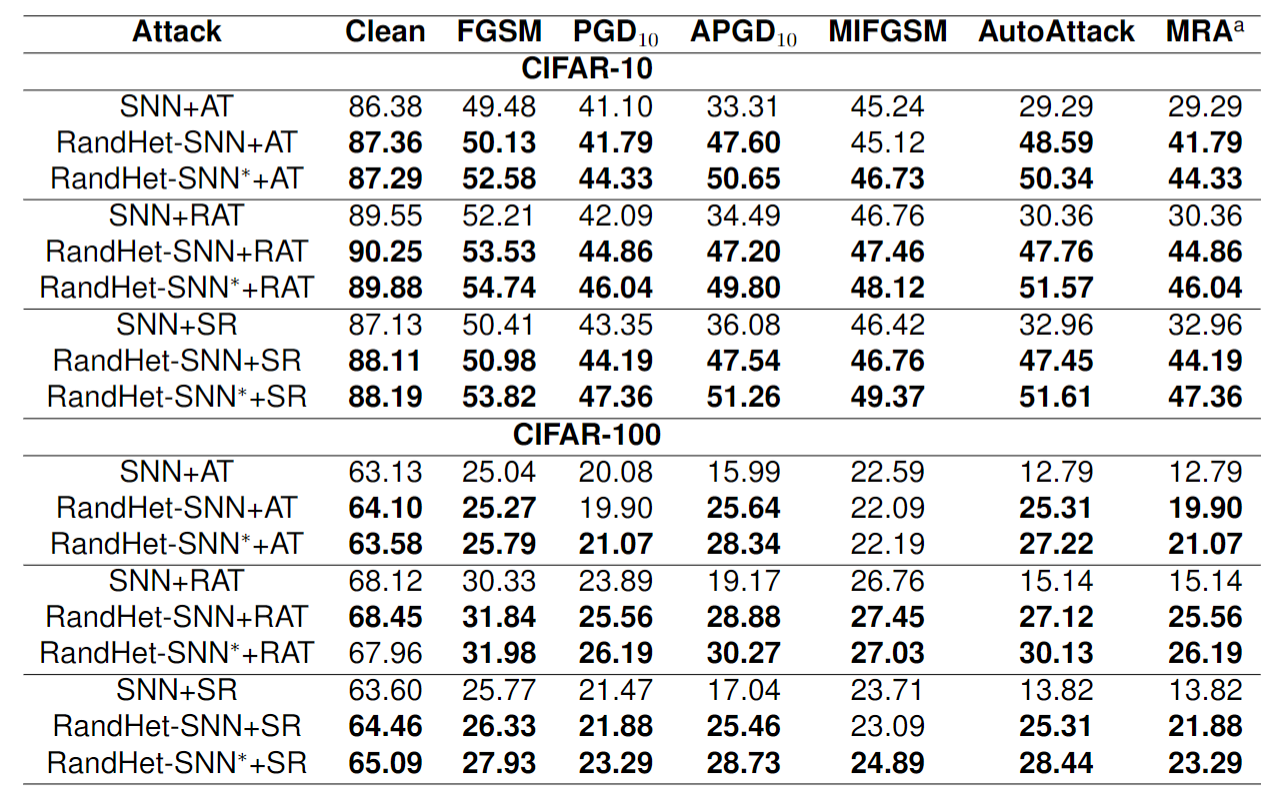

The study validated RandHet-SNN’s adversarial robustness against multiple attack methods. Results showed that both RandHet-SNN and RandHet-SNN* combined effectively with various adversarial training techniques, substantially improving robustness across different attack types. Crucially, RandHet-SNN achieved this with minimal loss of clean accuracy.

Figure 3. Performance diagram of RandHet-SNN.

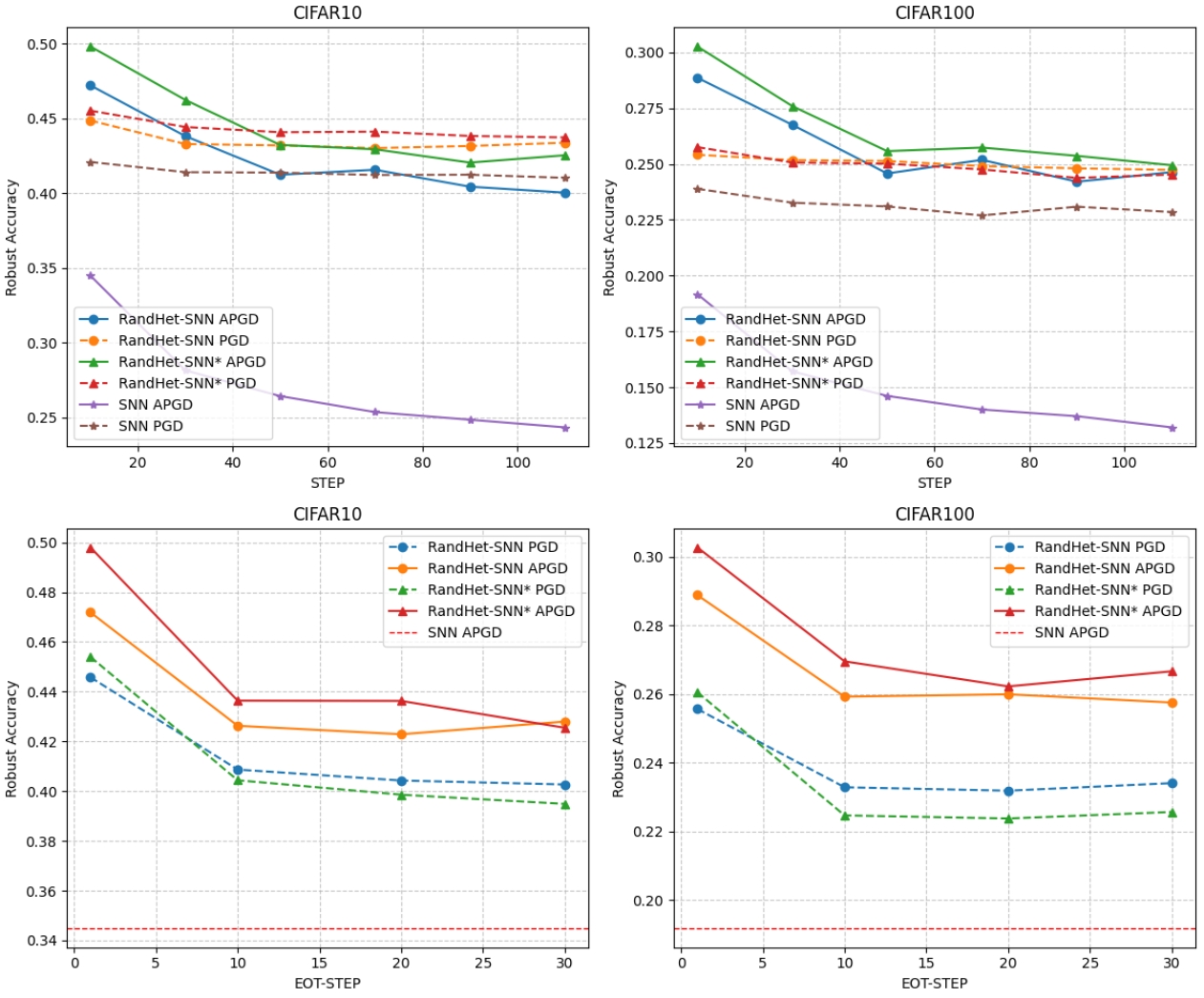

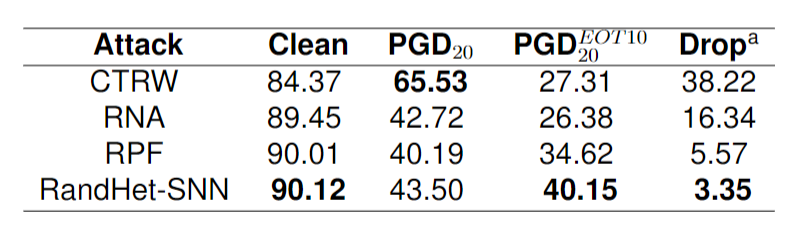

To rigorously evaluate adversarial robustness, the study carefully addressed gradient obfuscation when generating white-box adversarial samples. It used the Expectation Over Transformation (EOT) method during each attack iteration to obtain more precise gradient estimates. Results indicated that once the number of EOT steps exceeded 10, RandHet-SNN’s robust accuracy stabilized and consistently outperformed the SNN baseline.
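The idea behind EOT is to average the gradient over several independent random draws of the model before each attack step, so that the attacker follows the expected gradient rather than a single noisy sample. The sketch below is a toy illustration under stated assumptions: `toy_grad` is a hypothetical stand-in for the input gradient of a randomized network, and the single sign-gradient step with `eps=8/255` is a generic choice, not the attack configuration used in the paper.

```python
import numpy as np

def eot_gradient(grad_fn, x, rng, steps=10):
    """Average grad_fn(x, rng) over `steps` independent random draws."""
    return np.mean([grad_fn(x, rng) for _ in range(steps)], axis=0)

def toy_grad(x, rng):
    # Hypothetical noisy gradient: a fixed "true" gradient 2*x plus
    # zero-mean noise standing in for the effect of random time constants.
    return 2.0 * x + rng.normal(0.0, 1.0, size=x.shape)

def sign_gradient_step(x, grad, eps=8 / 255):
    """One sign-gradient attack step, keeping pixel values in [0, 1]."""
    return np.clip(x + eps * np.sign(grad), 0.0, 1.0)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(3, 8, 8))   # placeholder image
g_single = toy_grad(x, rng)                 # one noisy sample
g_eot = eot_gradient(toy_grad, x, rng, steps=10)
x_adv = sign_gradient_step(x, g_eot)
```

Averaging reduces the variance of the estimate roughly in proportion to the number of draws, which is consistent with the article's observation that robust accuracy stops changing once enough EOT steps are used.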

Additionally, randomization methods used in ANNs showed significantly decreased robust accuracy under EOT attacks, while RandHet-SNN maintained higher clean and robust accuracy with less performance degradation. These findings indicate that RandHet-SNN is a more reliable approach to randomization for adversarial defense.

Figure 4. RandHet-SNN performance under EOT attack.

Figure 5. Comparison of RandHet-SNN with ANN randomization methods.

PhD student Jihang Wang (first author) said:

“This research introduces both randomness and heterogeneity into SNNs via randomized time constants. Different sampling in each trial leads to trial-to-trial variability in spiking patterns. By tuning sampling variance, RandHet-SNN controls the heterogeneity of time constant distributions. Our rigorous EOT-based evaluation confirms RandHet-SNN’s ability to enhance adversarial defense capabilities.”

Figure 6. Illustration of trial-to-trial variability in spiking patterns and time constant distribution under different variances.

Corresponding author Dr. Yi Zeng commented:

“Adversarial robustness is a critical security capability for AI models. This work integrates advances in brain-inspired AI and AI safety by proposing a method that enhances SNN robustness via time constant randomization. RandHet-SNN has been integrated into our brain-inspired cognitive intelligence engine, ‘BrainCog’, representing another key contribution to its security and robustness capabilities.

RandHet-SNN simultaneously improves clean and robust accuracy across various adversarial attack scenarios. Its low sensitivity to variance changes demonstrates stability under hyperparameter fluctuations, highlighting strong resistance to interference and good environmental adaptability. By deeply integrating the stochasticity and heterogeneity of biological neural information processing, this study advances the adversarial robustness of AI systems built entirely on spiking neural networks.

Future work will explore theoretical foundations for enhancing adversarial defenses in brain-inspired models, deepening our understanding of the relationship between stochastic mechanisms in brain information processing and robustness, and guiding the development of safer cutting-edge AI models.”

Random Heterogeneous Spiking Neural Network for Adversarial Defense

https://www.cell.com/iscience/fulltext/S2589-0042(25)00921-6

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Snn_safety/RandHet-SNN

Jihang Wang

PhD student (2021 cohort) in the Brain-Like Cognitive Intelligence Research Group at the Institute of Automation, Chinese Academy of Sciences, advised by Dr. Yi Zeng. His research focuses on adversarial robustness in brain-inspired spiking neural networks, with publications in iScience and NeurIPS.

Dongcheng Zhao

Senior Researcher at the Beijing Institute for Advanced AI Security and Governance, Director of the AI Security Center. His research covers brain-inspired intelligence, AI safety and alignment, and AI ethics and governance. Publications include PNAS, Patterns (cover article), iScience, Scientific Data (Nature Publishing Group), IEEE Transactions series, and top AI conferences such as ICLR, NeurIPS, CVPR, IJCAI, and AAAI. Twice awarded Cell Press’s “China Paper of the Year” and leads multiple national research projects and industry collaborations.

Chengcheng Du

PhD student (2023 cohort) in the Brain-Like Cognitive Intelligence Research Group at the Institute of Automation, Chinese Academy of Sciences, advised by Dr. Yi Zeng. Research interests include modeling working memory across species and reinforcement learning applications. Has published in Frontiers, iScience, and other journals.

Xiang He

PhD student (2023 cohort) in the Brain-Like Cognitive Intelligence Research Group at the Institute of Automation, Chinese Academy of Sciences. Research focuses on brain-inspired methods and multimodal learning in spiking neural networks, with publications in NeurIPS, AAAI, and ACM MM, among others.

Qian Zhang

Associate Researcher in the Brain-Like Cognitive Intelligence Research Group at the Institute of Automation, Chinese Academy of Sciences. Specializes in brain-inspired cognitive modeling, especially working memory modeling and simulation of brain rhythms at different consciousness levels. Published in Computers in Biology and Medicine, IEEE TVLSI, Patterns, Information Sciences, and more.

Yi Zeng

Professor at the Institute of Automation, Chinese Academy of Sciences; Director of the Beijing Key Laboratory for AI Security and Super Alignment; President of the Beijing Institute for Advanced AI Security and Governance; Professor and PhD supervisor at the University of Chinese Academy of Sciences; Chair of the CAAI Committee on Cognitive Computing; Member of China’s National New Generation AI Governance Expert Committee; Expert on the UN High-Level Advisory Body on AI; and UNESCO AI Ethics Expert Group member.

Research interests include brain-inspired AI, AI ethics, safety and governance, and AI for sustainable development. Named one of TIME magazine’s 100 most influential people in AI. Publications include PNAS, Patterns, iScience, Scientific Data, Scientific Reports (Nature Publishing Group), Science Advances (AAAS), and major AI journals and conferences such as IEEE TPAMI, TEVC, TVLSI, TCAD, TCDS, TAI, Neural Networks, NeurIPS, CVPR, IJCAI, and AAAI.

Address: 95 Zhongguancun East Road, 100190, BEIJING, CHINA

Email: brain-ai@ia.ac.cn