Laboratory of Brain Atlas and Brain-Inspired Intelligence at CASIA and Peking University Collaboratively Release Open-Source Deep Spiking Neural Network Framework SpikingJelly


Spiking Neural Networks (SNNs), known as the third generation of neural networks, abstract the biological nervous system at a lower level. They are not only fundamental tools in neuroscience for studying brain principles but also attract significant attention in computing for their sparse computation, event-driven operation, and ultra-low-power characteristics. With the introduction of deep learning methods, SNN performance has improved dramatically, making spiking deep learning an emerging research hotspot. Traditional SNN frameworks have focused more on biological interpretability, aiming to simulate fine-grained spiking neurons and real biological systems. However, they typically lack automatic differentiation, cannot fully leverage GPU parallel computing, and offer poor support for neuromorphic sensors and computing chips.

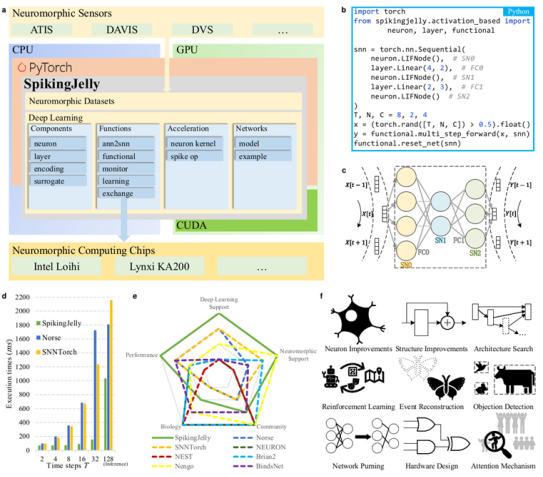

To address these limitations, Prof. Li Guoqi's team from the Laboratory of Brain Atlas and Brain-inspired Intelligence at the Institute of Automation, Chinese Academy of Sciences, in collaboration with Prof. Tian Yonghong's team from the School of Computer Science, Peking University, developed and open-sourced SpikingJelly, a deep learning framework for spiking neural networks. SpikingJelly provides an end-to-end spiking deep learning solution, supporting neuromorphic data processing, deep SNN construction, surrogate gradient training, ANN-to-SNN conversion, weight quantization, and deployment on neuromorphic chips.
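As a rough illustration of the surrogate gradient idea mentioned above: a spiking neuron fires through a Heaviside step function, whose derivative is zero almost everywhere, so gradient-based training substitutes the derivative of a smooth function in the backward pass. The sketch below uses plain Python and a sigmoid surrogate. The function names and the sharpness parameter `alpha` are assumptions made for illustration and are not SpikingJelly's API.

```python
import math


def heaviside(x: float) -> float:
    """Forward pass: emit a spike (1.0) when the input is non-negative."""
    return 1.0 if x >= 0.0 else 0.0


def sigmoid_surrogate_grad(x: float, alpha: float = 4.0) -> float:
    """Backward pass: derivative of sigmoid(alpha * x), used in place of
    the Heaviside derivative, which is zero almost everywhere."""
    s = 1.0 / (1.0 + math.exp(-alpha * x))
    return alpha * s * (1.0 - s)


# x stands for (membrane potential - threshold); the name is illustrative.
for x in (-1.0, 0.0, 1.0):
    print(x, heaviside(x), round(sigmoid_surrogate_grad(x), 4))
```

The surrogate gradient peaks where the membrane potential equals the threshold and decays on both sides, so neurons close to firing receive the largest updates. A larger `alpha` makes the surrogate a closer approximation of the step function, at the cost of a narrower region with useful gradient.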

SpikingJelly offers several major advantages.

First, it is simple and user-friendly. Spiking deep learning sits at the intersection of computational neuroscience and deep learning, so it requires researchers to master knowledge from both fields. In practice, many are experts in only one. SpikingJelly provides an intuitive PyTorch-style API, bilingual (Chinese/English) tutorials, and an active, friendly discussion community. It also includes common network models and training scripts, enabling researchers from either field to quickly train and deploy deep SNNs with just a few lines of code.

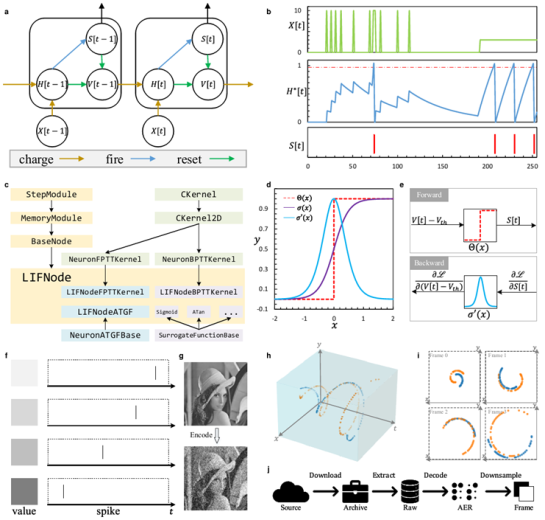

Second, it is highly extensible. Two research paradigms are widely used in the field and have produced many results: mimicking real biological neural systems, and borrowing from mature ANN experience. In both cases, researchers want to define and extend new models freely with minimal code changes, a need that SpikingJelly's design is built around. Most modules in SpikingJelly are implemented with clear hierarchical inheritance, which reduces development cost and offers ready templates for defining new models.
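The kind of hierarchical inheritance described above can be sketched in plain Python: a base neuron fixes the charge, fire, and reset sequence, and each new neuron model overrides only its membrane dynamics. This is a minimal sketch under stated assumptions. The class names, method names, and default parameters are hypothetical and are not taken from SpikingJelly's source.

```python
class BaseNode:
    """Hypothetical base neuron: charge -> fire -> reset at each time step."""

    def __init__(self, v_threshold: float = 1.0, v_reset: float = 0.0):
        self.v_threshold = v_threshold
        self.v_reset = v_reset
        self.v = v_reset  # membrane potential

    def neuronal_charge(self, x: float) -> None:
        raise NotImplementedError  # subclasses define the membrane dynamics

    def step(self, x: float) -> float:
        self.neuronal_charge(x)
        spike = 1.0 if self.v >= self.v_threshold else 0.0
        if spike:
            self.v = self.v_reset  # hard reset after firing
        return spike


class IFNode(BaseNode):
    """Integrate-and-fire: the potential accumulates the input."""

    def neuronal_charge(self, x: float) -> None:
        self.v += x


class LIFNode(BaseNode):
    """Leaky integrate-and-fire: the potential also decays toward v_reset."""

    def __init__(self, tau: float = 2.0, **kwargs):
        super().__init__(**kwargs)
        self.tau = tau  # membrane time constant (assumed default)

    def neuronal_charge(self, x: float) -> None:
        self.v += (x - (self.v - self.v_reset)) / self.tau


if_node, lif_node = IFNode(), LIFNode(tau=2.0)
if_spikes = [if_node.step(0.6) for _ in range(4)]
lif_spikes = [lif_node.step(1.2) for _ in range(4)]
print(if_spikes, lif_spikes)
```

In this sketch, adding another neuron model means writing one subclass with its own `neuronal_charge`, while the firing and reset logic is inherited unchanged.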

Third, it delivers exceptional performance. Deep learning inherently involves large-scale data processing and model training, and spiking deep learning is no exception. SNNs’ extra temporal dimension increases computational complexity, raising demands on resources. Given that datasets with millions of samples like ImageNet are now standard for SNNs, training speed is increasingly important. SpikingJelly fully exploits SNN properties using techniques such as optimized computational graph traversal, just-in-time (JIT) compilation, and semi-automated CUDA code generation to accelerate simulation, achieving up to 11× training speed improvements over other frameworks. Independent benchmarking by the Open Neuromorphic community tested multiple SNN frameworks, including those from Intel, SynSense, UCSC, Heidelberg University, and KTH. Results showed SpikingJelly delivered over 10× faster simulation speeds than alternatives.

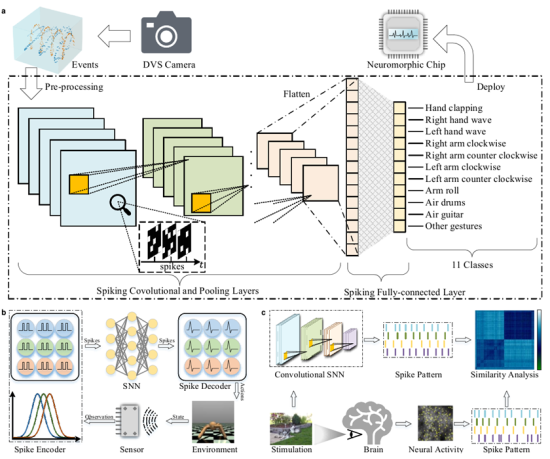

Since its debut in winter 2019, SpikingJelly has been widely adopted by researchers. Work based on SpikingJelly has been extensively published, expanding SNN applications from simple MNIST classification to human-level ImageNet classification, network deployment, event camera data processing, and more. It has also been used in cutting-edge research areas, including calibratable neuromorphic perception systems, neuromorphic memristors, and event-driven accelerator hardware design. Over 95 published papers have used SpikingJelly, with 25 in CCF-A top-tier AI conferences, 5 in IEEE Transactions, and 1 in Nature Communications. These applications and studies show that open-sourcing SpikingJelly has greatly advanced spiking deep learning.

Figure 1. SpikingJelly paper published in Science Advances

Figure 2. Structure, example code, simulation speed, ecosystem, and typical applications of the SpikingJelly framework

Figure 3. Typical modules in the SpikingJelly framework

Figure 4. Typical applications of the SpikingJelly framework

Paper link:

https://www.science.org/doi/full/10.1126/sciadv.adi1480

SpikingJelly framework links:

GitHub: https://github.com/fangwei123456/spikingjelly

OpenI Community: https://openi.pcl.ac.cn/OpenI/spikingjelly
