Brain-Inspired Cognitive AI Research Group Reveals Significant Cognitive Gap Between AI and Humans


Recently, the Brain-Inspired Cognitive AI Research Group, led by Researcher Yi Zeng, drew inspiration from the illusory contour phenomenon widely observed in human and animal visual systems. The team proposed a method for converting standard machine-learning vision datasets into illusory contour samples, which makes it possible to measure quantitatively how well current deep learning models recognize illusory contours. Experimental results showed that both classical and state-of-the-art deep neural networks struggle to recognize these contours in a human-like way. The study was published in the Cell Press journal Patterns.

Over the past decade, neural networks and deep learning models have appeared to achieve remarkable success, even surpassing human performance on specific aspects of many visual tasks. However, their performance often degrades under various image distortions and corruptions. An extreme example is the adversarial attack, in which tiny, imperceptible perturbations can cause a neural network to fail completely, whereas the human visual system remains highly robust to such distortions. This highlights fundamental differences between deep learning and biological vision systems.

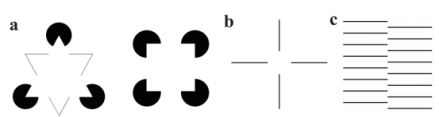

Illusory contours are a classic phenomenon in cognitive psychology in which the visual system perceives clear boundaries even in the absence of color contrast or luminance gradients (Figure 1). This ability is widespread across humans and many animal species, including mammals, birds, and insects. Its presence in independently evolved visual systems suggests that it is a fundamental aspect of biological vision processing, and therefore a capability that artificial vision systems should also possess. The abutting grating illusion is a classic form of the illusory contour phenomenon, in which offset gratings induce false edges and shapes without luminance contrast. This study focused on testing deep learning models’ ability to perceive abutting grating illusions. Although the illusion is commonly used in physiological studies to probe biological processing of illusory contours, research on deep learning models’ robustness to it is rare. Evaluating this robustness is also more complex than evaluating robustness to typical image corruptions, mainly because illusory contour stimuli are limited in number and often manually designed in the psychology literature, and thus do not form a large-scale, machine-learning-style test set.

Figure 1. Classic illusory contour images in psychology. a. Kanizsa triangle and square; b. Ehrenstein illusion; c. Abutting grating illusion.

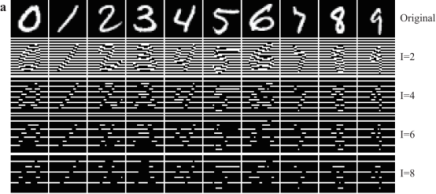

Yi Zeng’s group proposed a method called Abutting Grating Distortion as a systematic way to generate large-scale illusory contour images from silhouette images without internal texture information. The team applied this method to the MNIST handwritten digits dataset and to silhouette images of objects (16-class ImageNet silhouettes), interpolating images to enhance clarity and create stronger illusory effects for human perception. Examples are shown in Figure 2. These test images allow direct testing of deep learning models trained on MNIST or ImageNet without needing to retrain the models. Because different parameter settings produce illusions of varying strengths, the study also conducted human experiments to examine how different distortions affect human perception of illusory contours.
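The idea of turning a silhouette into an abutting grating image can be illustrated with a minimal sketch. It is not the group's implementation (that is in the code repository linked at the end of this article); it assumes the simplest possible variant, in which a horizontal line grating is shifted by half a period inside the silhouette. The function name `abutting_grating` and the parameters `period` and `offset` are hypothetical names chosen for this illustration.

```python
def abutting_grating(mask, period=4, offset=None):
    """Render a binary silhouette as a toy abutting grating image.

    mask   : list of rows, each a list of 0/1 (1 = inside the silhouette)
    period : vertical spacing between grating lines in pixels (assumed parameter)
    offset : phase shift applied inside the silhouette; defaults to half a period
    Returns an image of the same size with 1 on grating lines and 0 elsewhere.
    """
    if period < 2:
        raise ValueError("period must be at least 2")
    if offset is None:
        offset = period // 2
    image = []
    for y, row in enumerate(mask):
        out_row = []
        for inside in row:
            # Background lines sit on rows where y % period == 0; inside the
            # silhouette the same grating is shifted down by `offset` rows.
            phase = (y - offset) % period if inside else y % period
            out_row.append(1 if phase == 0 else 0)
        image.append(out_row)
    return image


# An 8x8 canvas with a 4x4 square silhouette in the middle.
square = [[1 if 2 <= x < 6 and 2 <= y < 6 else 0 for x in range(8)]
          for y in range(8)]
for row in abutting_grating(square, period=4):
    print("".join("#" if v else "." for v in row))
```

In this toy version the lines inside and outside the silhouette have the same thickness and spacing, so the shape is signaled only by where the lines terminate and restart, which is the property the abutting grating illusion relies on. Changing `period` changes the grating spacing, one of the parameters the article says affects illusion strength.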

Figure 2. Examples generated with the Abutting Grating Distortion method.

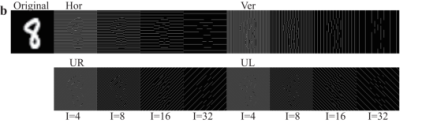

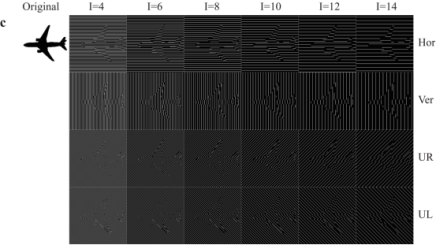

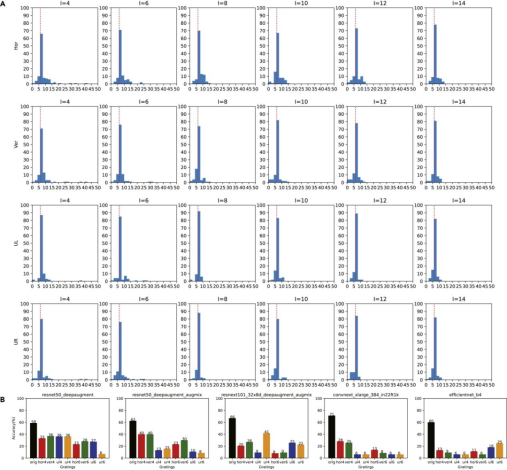

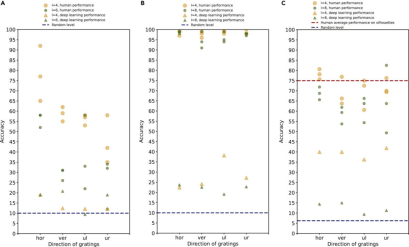

For deep learning models, the study trained fully connected and convolutional networks on MNIST, and AlexNet, VGG11 (BN), ResNet18, and DenseNet121 on high-resolution MNIST. For natural object silhouettes, 109 publicly available pre-trained models were evaluated, covering TorchVision and timm ImageNet pre-trained models that range from the classic AlexNet, VGGNet, and ResNet to the newer ViT and ConvNeXt, as well as models trained with heavy data augmentation such as CutMix, AugMix, and DeepAugment. Results on the abutting grating test sets (Figure 3) showed that although these models achieve extremely high accuracy on the original test sets, their performance on the illusory contour test sets collapsed to near random (around 10% accuracy). Figure 4 shows the accuracy of the pre-trained models on the abutting grating test set: most models performed at chance level, though some variation appeared depending on grating spacing. Notably, models trained with DeepAugment exhibited significantly improved robustness to the distorted dataset.
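What "near random" means depends on the number of classes. As a small worked example, assuming balanced classes and uniform random guessing, chance accuracy is 1 divided by the number of classes: 0.1 for the ten MNIST digits and 0.0625 for a 16-class silhouette task. The helper names below are hypothetical and are not taken from the paper's code.

```python
def chance_accuracy(num_classes):
    """Expected accuracy of uniform random guessing over balanced classes."""
    if num_classes < 1:
        raise ValueError("num_classes must be positive")
    return 1.0 / num_classes


def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


print(chance_accuracy(10))  # ten MNIST digit classes
print(chance_accuracy(16))  # 16-class object silhouettes
```

Comparing a model's measured `accuracy` against `chance_accuracy` for the matching task is one simple way to read the results in Figures 3 and 4.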

The study also recruited 24 human participants to evaluate how different parameter settings affected human illusory contour perception and digit/object recognition (Figure 5). Results showed that even the most advanced deep learning models lagged far behind human performance on the abutting grating illusion.

Figure 3. MNIST and high-resolution MNIST test results.

Figure 4. Pre-trained model test results.

Figure 5. Comparison of human experiment results and deep learning test results.

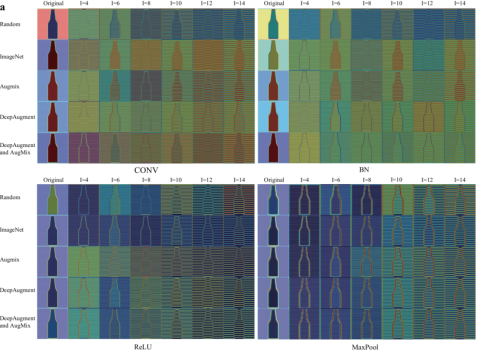

The researchers visualized shallow-layer neuron activity in models trained with and without DeepAugment (Figure 6). While both types of models showed activation along illusory contours in shallow layers, only DeepAugment-trained models exhibited endstopped neuron behavior. Endstopped neurons, first described by Hubel and Wiesel, are thought to support early representations of illusory contours and are used in computational models of this phenomenon. They respond maximally to line endpoints or corners within their receptive fields but reduce their activation for extended lines. Beyond illusory contours, endstopped neurons are also used to model other perceptual phenomena such as motion detection, curvature detection, and small-target detection in insects. In the DeepAugment-trained models, certain convolutional kernels showed spatial arrangements resembling the predicted topology of endstopped neurons (Figure 7).
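The endstopping property described above can be illustrated with a one-dimensional toy unit. This is a textbook-style sketch under stated assumptions, not the paper's analysis and not the kernels found in the DeepAugment-trained models: it assumes an excitatory zone along the unit's preferred orientation and a single inhibitory end zone on one side, with a rectified difference as the response. The function name and all parameters are hypothetical.

```python
def endstopped_response(line, center, half_width=1, end_zone=2, inhibition=1.0):
    """Toy single-end-stopped unit sampled along its preferred orientation.

    line       : list of 0/1 samples (1 = the line is present at that position)
    center     : index of the middle of the excitatory zone
    half_width : excitatory zone covers center - half_width .. center + half_width
    end_zone   : length of the inhibitory zone just beyond the excitatory zone
    inhibition : weight of the inhibitory end zone (assumed value)
    """
    def total(lo, hi):
        # Sum of samples in [lo, hi), clipped to the bounds of `line`.
        lo, hi = max(lo, 0), min(hi, len(line))
        return sum(line[lo:hi]) if lo < hi else 0

    excite = total(center - half_width, center + half_width + 1)
    inhibit = total(center + half_width + 1, center + half_width + 1 + end_zone)
    # Rectified difference: the response cannot go below zero.
    return max(0.0, excite - inhibition * inhibit)


line_end = [1] * 7 + [0] * 4   # line terminates inside the receptive field
long_line = [1] * 11           # line extends through the inhibitory end zone

print(endstopped_response(line_end, center=5))
print(endstopped_response(long_line, center=5))
```

With these settings the unit responds more strongly to the line that terminates at the edge of its excitatory zone than to the line that continues into the end zone, which is the qualitative behavior attributed to endstopped neurons.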

In summary, all deep neural network models studied, regardless of training method, exhibited shallow-layer activation along illusory contours in maxpool layers. However, this activation did not translate into successful behavioral-level recognition of illusory contours. Only DeepAugment-trained models displayed the endstopping property associated with improved illusory contour perception. This suggests that future work should focus on understanding the relationship between endstopped neuron properties and illusory contour recognition.

Figure 6. Shallow-layer visualizations in ResNet50.

Figure 7. Predicted topology of endstopped neuron-like phenomena.

First author Jinyu Fan commented that this research bridges cognitive science and AI by transforming standard vision datasets into abutting grating illusion images, enabling the first large-scale quantitative assessment of pre-trained models’ illusory contour perception, and analyzing both neuronal dynamics and behavioral performance.

Corresponding author Yi Zeng noted: “We believe the greatest value of this study is in testing and partially re-examining seemingly successful artificial neural network models from a cognitive science perspective, demonstrating the significant gap that remains between artificial neural networks and human visual processing—and this is only the tip of the iceberg. The workings of the brain and the nature of intelligence will continue to inspire AI research. For truly fundamental breakthroughs, AI must draw on insights from natural evolution and the brain and mind to build an intelligent theoretical framework. Only then will AI have a sustainable future.”

Paper title: Challenging Deep Learning Models with Image Distortion based on the Abutting Grating Illusion

Paper link: https://www.cell.com/patterns/fulltext/S2666-3899(23)00026-0

Code repository: https://github.com/Brain-Cog-Lab/AbuttingGratingIllusion
