Brain-Inspired Cognitive AI Group Develops Spiking Neural Network Accelerator


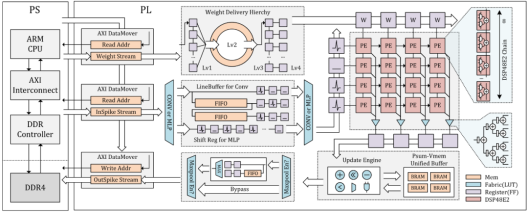

Recently, the Brain-Inspired Cognitive AI Group led by Researcher Zeng Yi at the Laboratory of Brain Atlas and Brain-Inspired Intelligence, Institute of Automation, Chinese Academy of Sciences, proposed an FPGA-based spiking neural network hardware accelerator named “BrainCog·FireFly”. The accelerator combines DSP computation optimizations tailored to FPGA devices with an efficient memory access system for synaptic weights and membrane potentials, matched to the spiking neural network dataflow model. It achieves hardware-level inference acceleration for spiking neural networks, bringing the technology closer to practical deployment. The related research was published in IEEE Transactions on Very Large Scale Integration (VLSI) Systems.
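To make the spiking dataflow more concrete, here is a minimal Python sketch of one fully connected layer of spiking neurons run over several timesteps. The neuron model (integrate-and-fire with hard reset and no leak), the integer weights, and the names `if_layer_step` and `run_layer` are illustrative assumptions, not details taken from the FireFly paper. The sketch shows two properties that SNN hardware can exploit. Because spikes are binary, each synaptic operation is a conditional addition rather than a multiplication. In addition, every timestep reads the synaptic weights and both reads and writes the membrane potentials.

```python
from typing import List, Tuple


def if_layer_step(
    spikes_in: List[int],          # binary input spikes (0 or 1), one per input neuron
    weights: List[List[int]],      # weights[j][i]: synapse from input i to output neuron j
    v_mem: List[int],              # membrane potentials carried over from the previous step
    threshold: int,
) -> Tuple[List[int], List[int]]:
    """One timestep of an integrate-and-fire layer (illustrative model)."""
    spikes_out: List[int] = []
    new_v: List[int] = []
    for j, row in enumerate(weights):
        v = v_mem[j]                       # read this neuron's stored potential
        for i, s in enumerate(spikes_in):
            if s:                          # binary spike: add the weight, no multiply
                v += row[i]
        if v >= threshold:                 # fire, then hard-reset the potential
            spikes_out.append(1)
            v = 0
        else:
            spikes_out.append(0)
        new_v.append(v)                    # write the updated potential back
    return spikes_out, new_v


def run_layer(
    spike_train: List[List[int]],          # spike_train[t] is the input spike vector at step t
    weights: List[List[int]],
    threshold: int,
) -> Tuple[List[List[int]], List[int]]:
    """Run the layer over T timesteps: weights are reused, v_mem persists."""
    v_mem = [0] * len(weights)
    outputs: List[List[int]] = []
    for spikes_in in spike_train:
        out, v_mem = if_layer_step(spikes_in, weights, v_mem, threshold)
        outputs.append(out)
    return outputs, v_mem


outputs, v_mem = run_layer([[1, 0], [1, 1], [0, 1]], [[3, -1], [2, 2]], threshold=4)
print(outputs, v_mem)  # [[0, 0], [1, 1], [0, 0]] [-1, 2]
```

In this toy model, the weight matrix is read again on every timestep, and `v_mem` has to be stored and updated between timesteps. This illustrates why efficient access to both synaptic weights and membrane potentials matters in an SNN accelerator.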

As algorithmic mechanisms continue to advance, the performance of brain-inspired spiking neural networks (SNNs) has steadily improved. However, hardware support for these networks has lagged behind algorithmic progress. As a reconfigurable hardware platform, the FPGA is well suited to hosting emerging spiking neural networks. Yet existing FPGA-based SNN accelerators remain limited in both computational and memory efficiency.

The BrainCog·FireFly accelerator developed by the team is a high-throughput, brain-inspired SNN accelerator with optimized computation and memory access, designed to address these challenges. To improve computational efficiency, the design makes full use of the dedicated DSP48E2 computation blocks in Xilinx UltraScale devices to perform SNN operations efficiently. To improve storage efficiency, it includes a dedicated memory system that provides efficient access to synaptic weights and membrane potentials.
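The article does not say which DSP48E2 features FireFly relies on. One documented DSP48E2 capability that suits binary-spike workloads is its SIMD mode (the `USE_SIMD = "FOUR12"` attribute). In this mode, the 48-bit ALU acts as four independent 12-bit adders, and the multiplier is not used. The Python sketch below models those semantics in software only. The 12-bit lane width, the packing layout, and the helper names `pack`, `unpack`, `simd_add`, and `packed_accumulate` are assumptions made for illustration, not FireFly's actual scheme.

```python
from typing import List

LANE_BITS = 12                       # assumed lane width (FOUR12-style split of 48 bits)
NUM_LANES = 4
LANE_MASK = (1 << LANE_BITS) - 1


def pack(values: List[int]) -> int:
    """Pack four signed 12-bit values (two's complement) into one 48-bit word."""
    word = 0
    for k, v in enumerate(values):
        word |= (v & LANE_MASK) << (k * LANE_BITS)
    return word


def unpack(word: int) -> List[int]:
    """Split a 48-bit word into four signed 12-bit lane values."""
    out: List[int] = []
    for k in range(NUM_LANES):
        lane = (word >> (k * LANE_BITS)) & LANE_MASK
        if lane & (1 << (LANE_BITS - 1)):  # sign-extend negative lanes
            lane -= 1 << LANE_BITS
        out.append(lane)
    return out


def simd_add(a: int, b: int) -> int:
    """Lane-wise add: each lane wraps on its own, carries never cross lanes."""
    result = 0
    for k in range(NUM_LANES):
        shift = k * LANE_BITS
        la = (a >> shift) & LANE_MASK
        lb = (b >> shift) & LANE_MASK
        result |= ((la + lb) & LANE_MASK) << shift
    return result


def packed_accumulate(spikes_in: List[int], weight_words: List[int]) -> List[int]:
    """weight_words[i] packs the weights from input i to four output neurons.

    A binary spike decides whether the whole packed word is added, so one
    addition updates four neurons' partial sums at once.
    """
    acc = 0
    for s, w in zip(spikes_in, weight_words):
        if s:
            acc = simd_add(acc, w)
    return unpack(acc)


words = [pack([1, -2, 3, 100]), pack([5, 5, 5, 5]), pack([10, 20, -30, -100])]
print(packed_accumulate([1, 0, 1], words))  # [11, 18, -27, 0]
```

On the FPGA, each `simd_add` would correspond to a single DSP addition. The Python loops only emulate how carries are isolated between lanes. The trade-off is narrower accumulators, so in this model lane width bounds the range of partial sums.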

Notably, BrainCog·FireFly is designed as an edge-oriented FPGA accelerator and reaches a peak computation throughput of 5.53 TOP/s even on resource-constrained FPGA devices. Among systolic-array-based SNN accelerators, FireFly achieves an 8.5× improvement in computation throughput over prior work on FPGA devices of similar scale (e.g., Cerebron [TVLSI'22]), which allows it to maintain millisecond-level latency on deep spiking neural networks. Despite being a lightweight design, FireFly also delivers higher computational efficiency than existing SNN accelerators that rely on large-scale FPGA systems.

According to the development team, BrainCog·FireFly represents a milestone result in the hardware–software co-design effort of the Brain-Inspired Cognitive Intelligence Engine “BrainCog” platform. It lays a solid foundation for future research into practical deployments of spiking neural networks in more complex real-world scenarios. Next steps will focus on further enhancing FireFly’s performance through FPGA-level optimizations, microarchitecture design, and sparse acceleration techniques, with planned real-world deployments in tasks such as autonomous visual localization and navigation for intelligent vehicles, high-speed obstacle avoidance for drones equipped with event cameras, and multi-task, multi-scene robotic exploration.

Figure 1. Overall Hardware Architecture of BrainCog·FireFly

Paper Link:

https://ieeexplore.ieee.org/abstract/document/10143752

Copyright Institute of Automation, Chinese Academy of Sciences. All Rights Reserved.

Address: 95 Zhongguancun East Road, 100190, Beijing, China

Email: brain-ai@ia.ac.cn