Brain-Inspired Cognitive Intelligence Engine "BrainCog" Awarded Cell Press 2023 China Paper of the Year


The Brain-Inspired Cognitive AI Lab at the Institute of Automation, Chinese Academy of Sciences, led by Professor Yi Zeng, has spent over a decade developing the brain-inspired cognitive intelligence engine “BrainCog,” which is built entirely on spiking neural networks (SNNs). The goal is to provide both theoretical foundations and technical pathways for exploring artificial general intelligence.

Thanks to its scientific rigor and innovation, BrainCog was published in 2023 as a cover article in Cell Press’s journal Patterns and was named Cell Press’s 2023 China Paper of the Year. This honor recognizes BrainCog’s leading position in the field of brain-inspired intelligence and highlights its role as an open-source platform driving collaborative development across academic research, technological innovation, and industry applications.

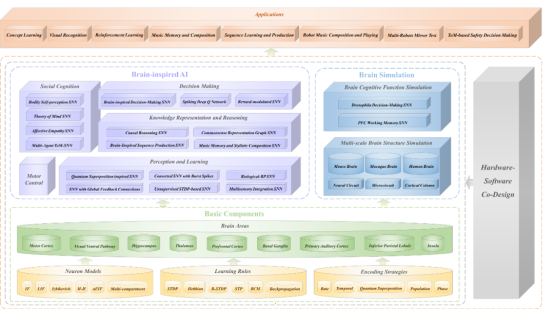

As a globally oriented open-source brain-inspired AI platform, BrainCog not only supports fundamental research and algorithm development but also offers a highly flexible toolkit for industry applications. Its components span multi-scale biologically plausible plasticity rules, diverse SNN architectures, and multimodal data interfaces, forming a full-stack solution from brain simulation to brain-inspired AI applications. BrainCog is committed to fostering global collaboration among researchers and developers. It is openly available on GitHub and the OpenI community, has been downloaded more than ten thousand times, and continues to attract attention in both academia and industry.

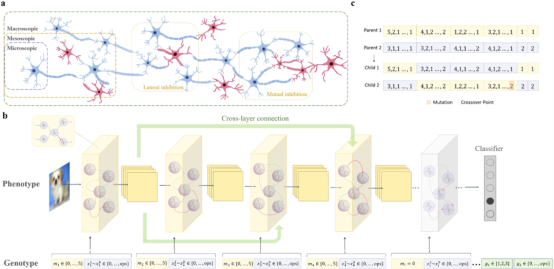

Figure 1: Computational components and applications of the BrainCog engine

Over the past year, the BrainCog team has focused on three core themes: “high intelligence,” “low power consumption,” and “hardware-software co-adaptation,” achieving multiple breakthrough results. By simulating neuronal dynamics, synaptic plasticity, and the development and evolution of neural network structures, BrainCog addresses computational challenges in complex tasks while achieving near-biological cognitive performance with extremely low energy consumption. This lays the foundation for future brain-inspired large models and promotes cross-disciplinary integration among neuromorphic computing, cognitive science, and AI technology. These results have been published in top journals, including PNAS, Cell Press’s iScience, Nature’s Scientific Data, IEEE TPAMI, TEVC, TCAD, TCAS-I, and TVLSI, and at conferences such as IJCAI, CVPR, and NeurIPS, demonstrating the team’s innovative capabilities across disciplines.

BrainCog’s progress toward general high-level intelligence

BrainCog continues to advance toward general high-level intelligence. With development and evolution as its core themes, the team builds new architectures and capabilities for brain-inspired SNNs to significantly improve performance in advanced cognitive tasks such as perception, learning, and decision-making.

Brain-inspired neural circuit evolution: empowering high-level decision-making and learning

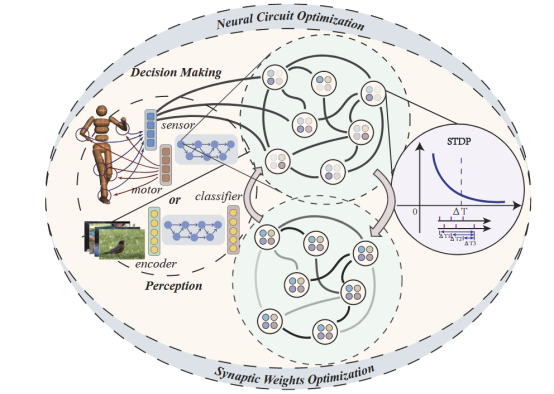

Different types of neural circuits and their adaptive capabilities in the human brain enable complex cognitive functions such as perception, learning, and decision-making. Most current SNN architectures still borrow heavily from traditional deep learning designs without fully incorporating the brain’s adaptive structural mechanisms. To address this, the BrainCog team integrates excitatory and inhibitory neurons and feedforward-feedback connections with local unsupervised learning rules and structural evolution mechanisms to generate biologically plausible circuit structures. By combining these with global error signals, the evolved brain-inspired SNNs show significantly enhanced abilities in perception, reinforcement learning, and decision-making. Related work, Brain-inspired neural circuit evolution for spiking neural networks, was published in Proceedings of the National Academy of Sciences (PNAS).

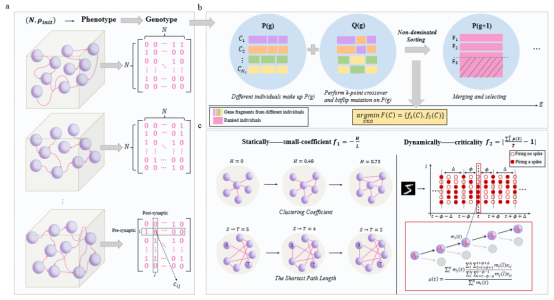

Figure 2: Brain-inspired spiking neural network constructed using neural evolution

Paper link: https://www.pnas.org/doi/abs/10.1073/pnas.2218173120

Cross-scale neural architecture search: breaking through multi-level co-evolution

The human brain’s multi-scale structural plasticity, which spans diverse microcircuits with excitatory/inhibitory coordination and long-range interregional connections, allows it to self-organize into complex yet efficient systems. Inspired by this, the BrainCog team co-evolves multi-scale brain-inspired SNN structures, from microscopic neurons to mesoscopic motifs and macroscopic global connectivity. Using brain-like adaptive fitness functions, they naturally select more adaptive, generalizable, and transferable SNN architectures. Evaluated on multiple static and neuromorphic datasets, these evolved SNNs deliver higher performance, lower power consumption, stronger robustness, and better transferability. The work, Brain-inspired Multi-scale Evolutionary Neural Architecture Search for Spiking Neural Networks, has been accepted by IEEE Transactions on Evolutionary Computation (TEVC).

Figure 3: Multi-scale spiking neural network evolution

Paper link: https://arxiv.org/abs/2304.10749

Self-organized emergence of small-world networks: innovation in efficient topology

The BrainCog team leverages the brain’s efficient small-world topology, using static small-world coefficients and dynamic critical steady states as fitness functions. Through multi-objective evolution of randomly connected reservoir SNNs, they guide the emergence of brain-like modular structures and hub nodes, improving evolution efficiency while achieving high performance and low power across tasks. Related work, Emergence of Brain-inspired Small-world Spiking Neural Network through Neuroevolution, was published in Cell Press’s iScience.

Figure 4: Multi-objective evolutionary reservoir network

Paper link: https://www.cell.com/iscience/fulltext/S2589-0042(24)00066-X
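
To make the idea of a static small-world coefficient concrete, the following is a minimal sketch in plain Python. It computes one commonly used coefficient, sigma = (C / C_rand) / (L / L_rand), where C is the mean clustering coefficient and L the mean shortest-path length. The random-graph references C_rand ≈ k/n and L_rand ≈ ln(n)/ln(k) are textbook approximations assumed here for illustration, and all function names are hypothetical. This is not the fitness function used in the paper.

```python
import math
from collections import deque


def ring_lattice(n, k):
    """Undirected ring lattice: each node links to its k nearest neighbors (k even)."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for step in range(1, k // 2 + 1):
            j = (i + step) % n
            adj[i].add(j)
            adj[j].add(i)
    return adj


def clustering_coefficient(adj):
    """Mean local clustering: fraction of a node's neighbor pairs that are linked."""
    total = 0.0
    for nbrs in adj.values():
        nbrs = list(nbrs)
        deg = len(nbrs)
        if deg < 2:
            continue
        links = sum(
            1
            for a in range(deg)
            for b in range(a + 1, deg)
            if nbrs[b] in adj[nbrs[a]]
        )
        total += 2.0 * links / (deg * (deg - 1))
    return total / len(adj)


def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered pairs, via BFS."""
    total, pairs = 0, 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def small_world_sigma(adj):
    """sigma > 1 suggests small-world structure (assumed random-graph references)."""
    n = len(adj)
    k = sum(len(nbrs) for nbrs in adj.values()) / n  # mean degree
    c_rand = k / n                        # approximation for a random graph
    l_rand = math.log(n) / math.log(k)    # approximation for a random graph
    return (clustering_coefficient(adj) / c_rand) / (average_path_length(adj) / l_rand)
```

In an evolutionary setting, a score like `small_world_sigma(adj)` could serve as one objective alongside task performance; how the objectives are actually combined is described in the linked paper.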

Developmental plasticity–driven adaptive pruning: enhancing network efficiency

Integrating biological brain mechanisms such as dendritic spine plasticity, local synaptic plasticity, and activity-dependent spiking traces, the team designed an adaptive pruning strategy based on the “use it or lose it” principle to remove redundant synapses and neurons. This approach explores how developmental plasticity can enable dynamic structural adjustment in neural networks, allowing them to “develop” into more efficient structures like biological brains. Their general developmental plasticity–inspired adaptive pruning method significantly improves performance and efficiency in both deep neural networks and SNNs. Related work, Developmental Plasticity-inspired Adaptive Pruning for Deep Spiking and Artificial Neural Networks, was published in IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI).

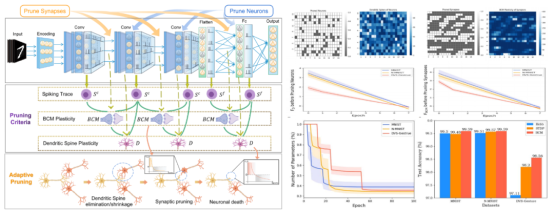

Figure 5: Developmental plasticity adaptive pruning method and process

Paper link: https://ieeexplore.ieee.org/document/10691937
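
The “use it or lose it” principle can be illustrated with a small sketch. The version below assumes a simple exponentially decaying co-activity trace per synapse and a fixed pruning threshold; the decay rate, threshold, and function names are hypothetical choices for illustration, not the rule published in the paper.

```python
def update_traces(traces, pre_spikes, post_spikes, decay=0.9):
    """Decay every synapse's activity trace, then reinforce synapses whose
    pre- and post-synaptic neurons both fired in this time step."""
    for i, pre in enumerate(pre_spikes):
        for j, post in enumerate(post_spikes):
            traces[i][j] = decay * traces[i][j] + (1.0 if pre and post else 0.0)
    return traces


def prune(weights, mask, traces, threshold):
    """Disable synapses whose activity trace fell below the threshold."""
    for i, row in enumerate(traces):
        for j, trace in enumerate(row):
            if mask[i][j] and trace < threshold:
                mask[i][j] = 0
                weights[i][j] = 0.0
    return weights, mask


def demo():
    weights = [[0.5, -0.3], [0.8, 0.1]]
    mask = [[1, 1], [1, 1]]
    traces = [[0.0, 0.0], [0.0, 0.0]]
    # Input neuron 0 and output neuron 0 fire together on every step;
    # input neuron 1 and output neuron 1 stay silent.
    for _ in range(10):
        update_traces(traces, pre_spikes=[1, 0], post_spikes=[1, 0])
    return prune(weights, mask, traces, threshold=0.5)
```

Calling `demo()` keeps only the synapse between the two co-active neurons and zeroes the other three, which is the behavior the principle describes: connections that are rarely used are removed.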

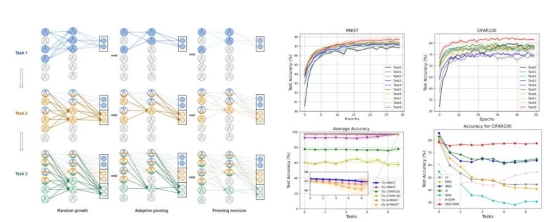

Continual learning with dynamic structural development: efficient knowledge reuse

Inspired by the brain’s dynamic expansion and pruning of neural pathways, the BrainCog team proposed a continual learning algorithm for SNNs with dynamic structural development. This model can grow, prune, and share neurons over time to reuse knowledge from previous tasks, improving continual learning performance while increasing memory capacity and reducing computation costs. Related work, Enhancing Efficient Continual Learning with Dynamic Structure Development of Spiking Neural Networks, was presented at IJCAI 2023.

Figure 6: Dynamic structure development in spiking neural networks and its performance

Paper link: https://www.ijcai.org/proceedings/2023/334

BrainCog’s progress toward low power consumption

BrainCog is advancing toward high-efficiency, energy-saving cognitive intelligence by developing new datasets and optimization algorithms.

N-Omniglot: a neuromorphic dataset for few-shot learning

Deep learning’s success relies on datasets like ImageNet and COCO, but brain-inspired AI, especially SNNs, lacks suitable datasets. DVS sensors mimic the human visual system by providing rich temporal information, but existing datasets like N-MNIST and DVS-Gesture have low temporal correlation, limiting SNN potential. To address this, the BrainCog team developed N-Omniglot, the first neuromorphic dataset designed for few-shot learning. Related work, N-Omniglot, a large-scale neuromorphic dataset for spatio-temporal sparse few-shot learning, was published in Nature’s Scientific Data.

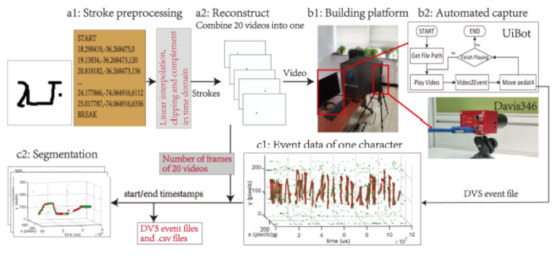

Figure 7: Full process of generating the N-Omniglot dataset

Paper link: https://www.nature.com/articles/s41597-022-01851-z

Bullying10K: A Privacy-Preserving Behavior Recognition Dataset

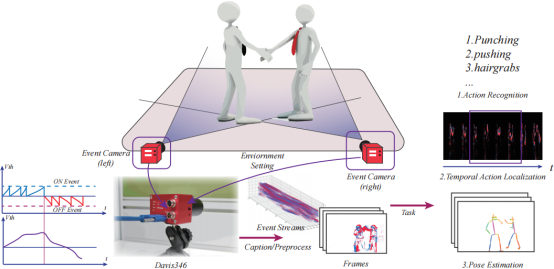

Public surveillance cameras have helped reduce violent incidents but have also raised privacy concerns. To address this, the BrainCog team used the characteristics of DVS cameras to create the Bullying10K neuromorphic dataset, which contains 10,000 event clips totaling 12 billion events and 255 GB of data. Unlike conventional cameras, DVS cameras generate event streams by detecting pixel-level brightness changes, which makes the recorded content harder to recognize. Bullying10K emphasizes capturing complex, fast, and potentially occluded actions, enabling violence detection while preserving participant privacy. The team hopes the dataset will provide valuable data for future research in the field and open up new opportunities for data protection methods. Related work, Bullying10K: a large-scale neuromorphic dataset towards privacy-preserving bullying recognition, was published in Advances in Neural Information Processing Systems 2024 (NeurIPS 2024).

Figure 8: Bullying10K recording process and benchmark tasks
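
As a rough illustration of how a DVS camera turns pixel-level brightness changes into an event stream, the sketch below emulates the process on ordinary grayscale frames. It assumes the usual idealized model in which a pixel emits an event when its log intensity changes by at least a contrast threshold; the threshold value, the `(t, x, y, polarity)` tuple layout, and the function name are assumptions for illustration, and real sensors and the Bullying10K recording pipeline differ in detail.

```python
import math


def frames_to_events(frames, timestamps, threshold=0.2):
    """Convert grayscale frames (lists of rows, values >= 0) into
    (t, x, y, polarity) events using a log-intensity change threshold."""
    eps = 1e-6  # avoids log(0) for fully dark pixels
    # Each pixel remembers the log intensity at its last event.
    reference = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                log_value = math.log(value + eps)
                diff = log_value - reference[y][x]
                if abs(diff) >= threshold:
                    events.append((t, x, y, 1 if diff > 0 else -1))
                    reference[y][x] = log_value
    return events
```

Static pixels produce no events at all, so the stream records motion rather than appearance, which is the property the dataset relies on for privacy.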

A New Perspective on Network Quantization and Energy Efficiency Optimization

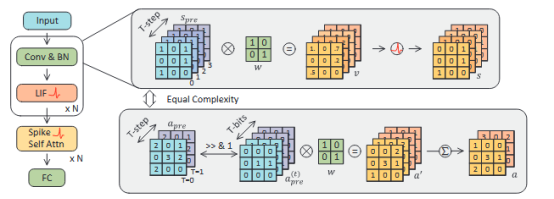

The BrainCog team re-examines the energy efficiency advantage of SNNs from the perspective of network quantization, asking how to compare them fairly with quantized artificial neural networks (ANNs). They propose a unified view that treats time steps in SNNs as analogous to activation bit widths in ANNs, together with a more practical method for estimating SNN energy consumption. In place of the traditional SynOps metric, they introduce the concept of a “Bit Budget” to study how computation and storage resources should be allocated among weights, activations, and time steps under strict hardware constraints. Guided by this bit budget, the study shows that spike patterns and weight quantization affect model performance more strongly than merely reducing time steps. Optimizing SNN design under the bit budget framework significantly improves performance on static image and neuromorphic datasets, bridging the theoretical gap between SNNs and quantized ANNs and offering a practical path to higher energy efficiency in neural computing. Related work, Are Conventional SNNs Really Efficient? A Perspective from Network Quantization, was presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024 (CVPR 2024).

Figure 9: Under the same feature bit budget, SNNs and quantized ANNs have equivalent representational complexity.

Paper link: https://ieeexplore.ieee.org/document/10656053/
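
The trade-off among weights, activations, and time steps can be made tangible with a little arithmetic. The sketch below assumes, purely for illustration, that the budget is the product of time steps, weight bit width, and activation (spike) bit width; the paper's exact definition may differ, and the function names and candidate values are hypothetical.

```python
from itertools import product


def bit_budget(time_steps, weight_bits, activation_bits):
    # Assumed definition for illustration: the product of the three factors.
    return time_steps * weight_bits * activation_bits


def configurations_within(
    budget,
    time_steps=(1, 2, 4, 8),
    weight_bits=(1, 2, 4, 8),
    activation_bits=(1, 2, 4),
):
    """List every (T, weight bits, activation bits) choice that spends exactly the budget."""
    return [
        (t, w, a)
        for t, w, a in product(time_steps, weight_bits, activation_bits)
        if bit_budget(t, w, a) == budget
    ]
```

Under this assumption, `configurations_within(16)` contains both `(4, 4, 1)`, an SNN with four time steps and binary spikes, and `(1, 4, 4)`, a single-pass ANN with 4-bit activations. The two spend the same budget, which mirrors the analogy between time steps and activation bit widths drawn above.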

TIM: An Efficient Temporal Interaction Module for Enhancing Sequential Modeling

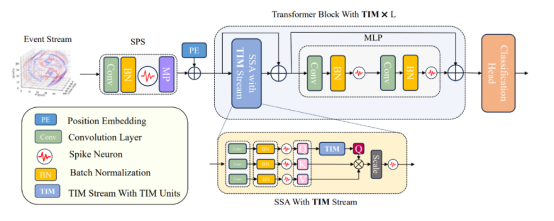

Spiking Transformers have achieved strong performance on image tasks, but models that rely solely on the membrane potentials of LIF neurons struggle to capture temporal information effectively. To address this, the BrainCog team designed the Temporal Interaction Module (TIM) to enhance a model’s ability to extract and analyze temporal representations. TIM is highly parameter-efficient: it retains the low energy consumption and high efficiency of SNNs while significantly improving the ability of Spiking Transformers to model temporal information. Related work, TIM: An Efficient Temporal Interaction Module for Spiking Transformer, was presented at the International Joint Conference on Artificial Intelligence 2024 (IJCAI 2024).

Figure 10: TIM framework schematic

Paper link: https://www.ijcai.org/proceedings/2024/0347.pdf
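
For readers unfamiliar with LIF neurons, the sketch below shows a common discrete-time leaky integrate-and-fire update with a hard reset. It illustrates why the membrane potential alone is a limited carrier of temporal information: the only state passed between time steps is a single scalar that is wiped on every spike. The time constant, threshold, and function name are assumptions for illustration; this is background on the neuron model, not an implementation of TIM.

```python
def lif_simulate(input_currents, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Discrete-time LIF neuron: leaky integration, then fire and hard reset."""
    v = v_reset
    spikes, potentials = [], []
    for current in input_currents:
        # Leaky integration: the potential relaxes toward the input current.
        v = v + (current - (v - v_reset)) / tau
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset discards the accumulated history
        else:
            spikes.append(0)
        potentials.append(v)
    return spikes, potentials
```

With a constant input of 1.5, `lif_simulate([1.5] * 4)` fires on every second step, and the stored potential returns to zero after each spike.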

BrainCog FireFly Series: Brain-Inspired Intelligent Hardware Innovation with Software-Hardware Co-Design

“BrainCog FireFly” is a high-throughput brain-inspired SNN accelerator with optimized computation and memory access, deeply integrated with the BrainCog platform for software-hardware co-design. It supports low-power, high-performance, and intelligent SNN applications for edge computing scenarios. Continuous development of the “BrainCog FireFly” series strengthens confidence in exploring the full potential of software-hardware co-designed brain-inspired AI. The team firmly believes that achieving low power, high performance, and high intelligence integration on relatively small-scale co-designed platforms represents the true future of AI.

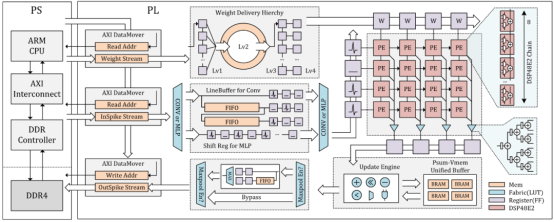

BrainCog FireFly

BrainCog FireFly is a high-throughput SNN accelerator that optimizes synaptic computation and the memory hierarchy. It uses the dedicated DSP48E2 blocks in Xilinx UltraScale devices for efficient SNN computation and features a memory system designed for efficient access to synaptic weights and membrane potentials. Although lightweight, FireFly achieves higher computational efficiency than existing large FPGA-based SNN accelerators, reaching a throughput of 5.53 TOP/s. Related work, FireFly: A High-Throughput Hardware Accelerator for Spiking Neural Networks With Efficient DSP and Memory Optimization, was published in IEEE Transactions on Very Large Scale Integration (VLSI) Systems.

Figure 11: FireFly V1 schematic

Paper link: https://ieeexplore.ieee.org/abstract/document/10143752

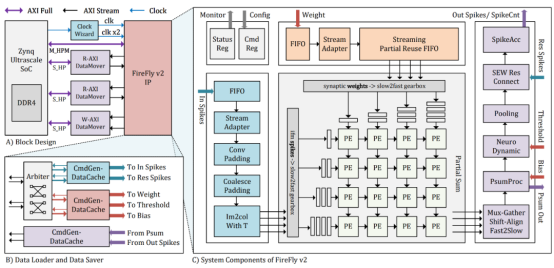

BrainCog FireFly-v2

BrainCog FireFly-v2 is an advanced brain-inspired SNN accelerator that evolves alongside algorithms. It introduces bit-decomposition mechanisms to handle multi-bit spike-unfriendly operations from a hardware perspective. The design optimizes the dataflow model, resolving timing dependencies to eliminate expensive on-chip membrane potential storage. By introducing double clock frequency technology, it doubles the operation unit frequency, achieving 40% higher utilization compared to existing FPGA-based SNN accelerators like DeepFire2, with significant performance improvements over the first generation. Related work, FireFly v2: Advancing Hardware Support for High-Performance Spiking Neural Network with a Spatiotemporal FPGA Accelerator, was published in IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD).

Figure 12: FireFly V2 schematic

Paper link: https://ieeexplore.ieee.org/abstract/document/10478105

BrainCog FireFly-S

BrainCog FireFly-S introduces dual-side sparsity acceleration through software-hardware co-design. At the algorithm level, it proposes a hardware-friendly learning rate quantization strategy tailored to SNN neuron characteristics, combined with a gradient rewiring-based pruning mechanism, to produce highly compressed models that are sparse in both weights and neuron spikes. At the hardware level, it optimizes the dataflow of the spatial architecture and introduces dual-side sparsity acceleration units that reduce inter-layer pipeline stalls and eliminate redundant zero-value computations, improving inference speed. Compared to earlier dense-computation FireFly versions, FireFly-S achieves 6x–20x better energy efficiency, and on smaller hardware platforms it reaches nearly 2x the efficiency of single-side sparse accelerators such as SyncNN. Related work, FireFly-S: Exploiting Dual-Side Sparsity for Spiking Neural Networks Acceleration With Reconfigurable Spatial Architecture, was published in IEEE Transactions on Circuits and Systems I: Regular Papers (TCAS-I).

Figure 13: FireFly S schematic

Paper link: https://ieeexplore.ieee.org/document/10754657
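
The benefit of exploiting sparsity on both sides can be sketched in software. The example below compares a dense layer evaluation with one that skips silent input neurons (spike sparsity) and pruned synapses (weight sparsity), counting the accumulate operations each performs. It is a conceptual illustration with hypothetical function names, assuming binary spikes and a simple compressed row format; it does not model the FireFly-S hardware dataflow.

```python
def dense_layer(spikes, weights):
    """Baseline: visit every (input, output) pair, including zeros."""
    outputs = [0.0] * len(weights[0])
    ops = 0
    for i, spike in enumerate(spikes):
        for j, w in enumerate(weights[i]):
            outputs[j] += spike * w
            ops += 1
    return outputs, ops


def dual_sparse_layer(spikes, weights):
    """Skip silent inputs (spike sparsity) and pruned synapses (weight sparsity)."""
    # Compressed rows keep only (column, value) pairs of nonzero weights.
    compressed = [[(j, w) for j, w in enumerate(row) if w != 0.0] for row in weights]
    outputs = [0.0] * len(weights[0])
    ops = 0
    for i, spike in enumerate(spikes):
        if not spike:
            continue  # a silent neuron contributes nothing
        for j, w in compressed[i]:
            outputs[j] += w  # binary spike: accumulate the weight directly
            ops += 1
    return outputs, ops
```

Both functions return the same outputs, but the dual-sparse version performs work only where a spike meets a nonzero weight, so its operation count shrinks with the product of the two sparsity levels.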
