The Laboratory of Brain Atlas and Brain-Inspired Intelligence Achieves New Progress in Multimodal Neural Information Encoding and Decoding

Decoding human visual neural representations is a scientifically significant challenge: it can reveal the mechanisms of visual processing and advance both neuroscience and artificial intelligence. However, current neural decoding methods struggle to generalize to new categories outside the training data. Two obstacles stand out: existing methods do not fully exploit the multimodal semantic knowledge underlying neural data, and paired (stimulus–brain response) training data are scarce.

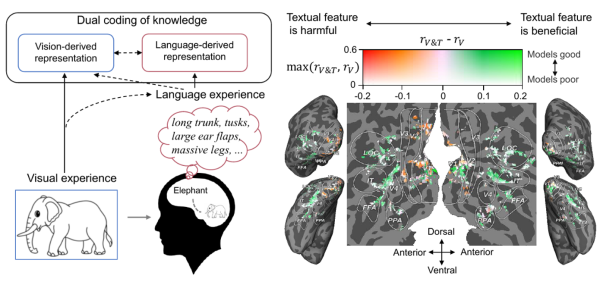

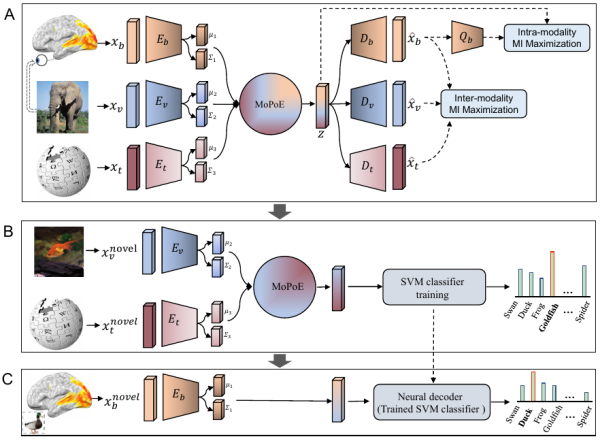

Recently, the Laboratory of Brain Atlas and Brain-Inspired Intelligence at the Institute of Automation, Chinese Academy of Sciences, combined brain, visual, and linguistic knowledge through multimodal learning to achieve zero-shot decoding of new visual categories from human brain activity. The study, titled “Decoding Visual Neural Representations by Multimodal Learning of Brain-Visual-Linguistic Features,” was published in IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI).

Human perception and recognition of visual stimuli are shaped by both visual features and prior experience. For example, when we see a familiar object, the brain naturally retrieves knowledge related to that object, as shown in Figure 1. Building on this observation, the study proposes a “Brain-Visual-Linguistic” (BraVL) trimodal joint learning framework. In addition to the visual semantic features of the images actually presented, the framework incorporates richer linguistic semantic features related to the visual target objects, in order to better decode brain signals.

The study demonstrated that decoding new visual categories from human brain activity is feasible and can achieve high accuracy. It also showed that using a combination of visual and linguistic features outperformed using either one alone, and that visual processing in the brain is influenced by language during semantic representation.
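To make the idea of zero-shot decoding with combined visual and linguistic features more concrete, here is a minimal sketch on synthetic data. It is not the BraVL model: it swaps in plain ridge regression from brain features to a concatenated visual-plus-linguistic embedding, followed by a nearest-prototype lookup over categories never seen in training. All names (`fit_ridge`, `zero_shot_decode`, `simulate_brain`), dimensions, and the linear "brain response" simulation are assumptions made for illustration only; the team's actual implementation is in the open-sourced code.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha*I)^-1 X^T Y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)

def l2_normalize(A):
    """Scale each row to unit length so dot products are cosine similarities."""
    return A / np.linalg.norm(A, axis=1, keepdims=True)

def zero_shot_decode(brain, W, prototypes):
    """Project brain features into semantic space, pick the nearest prototype."""
    pred = l2_normalize(brain @ W)
    return np.argmax(pred @ l2_normalize(prototypes).T, axis=1)

rng = np.random.default_rng(0)
n_seen, n_novel = 40, 5            # hypothetical numbers of categories
d_vis, d_txt, d_brain = 16, 16, 64  # hypothetical feature dimensions

# Synthetic per-category visual and linguistic features (stand-ins for
# features from pretrained image and text models).
vis = rng.normal(size=(n_seen + n_novel, d_vis))
txt = rng.normal(size=(n_seen + n_novel, d_txt))
sem = np.hstack([vis, txt])        # combined semantic prototype per category

# Assumption: brain responses are a noisy linear function of the semantics.
A = rng.normal(size=(d_vis + d_txt, d_brain))

def simulate_brain(class_ids, noise=0.1):
    clean = sem[class_ids] @ A
    return clean + noise * rng.normal(size=(len(class_ids), d_brain))

# Train only on seen categories.
train_ids = rng.integers(0, n_seen, size=400)
W = fit_ridge(simulate_brain(train_ids), sem[train_ids], alpha=1.0)

# Test on novel categories whose brain data were never used for training.
test_ids = rng.integers(n_seen, n_seen + n_novel, size=50)
pred = zero_shot_decode(simulate_brain(test_ids), W, sem[n_seen:])
accuracy = np.mean(pred == test_ids - n_seen)
print(f"zero-shot accuracy on novel categories: {accuracy:.2f}")
```

In this toy setting, replacing `sem` with `vis` or `txt` alone would give a visual-only or linguistic-only baseline. Because the synthetic data are generated from both feature sets, such a comparison says nothing about real brain data; it only shows where the combination enters the pipeline.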

These findings not only deepen our understanding of the human visual system but also offer new ideas for brain–computer interface (BCI) technologies. According to the research team, the work has three potential applications. First, as a neural semantic decoding tool, it can provide a technical foundation for new neural prosthetic devices capable of reading semantic information from the brain. Second, as a neural encoding tool, it can be used to study how visual and linguistic features are represented in the human cerebral cortex by inferring brain activity across modalities, revealing which brain regions exhibit multimodal properties (i.e., are sensitive to both visual and linguistic features). Third, as a tool for evaluating brain-likeness, it can test which models’ representations (visual or linguistic) are closer to human brain activity, thereby motivating researchers to design more brain-inspired computational models.

Figure 1. Dual encoding of knowledge in the human brain. When we see a picture of an elephant, we naturally retrieve related knowledge in our minds (such as its long trunk, tusks, and large ears). At this moment, the concept of the elephant is encoded in the brain both visually and linguistically, with language serving as an effective form of prior experience that helps shape the representations produced by vision.

Figure 2. The proposed “Brain-Visual-Linguistic” trimodal joint learning framework, abbreviated as BraVL.

The first author of this paper is Dr. Changde Du, Special Research Assistant at the Chinese Academy of Sciences, and the corresponding author is Dr. Huiguang He. The research was supported by the Ministry of Science and Technology’s Science and Technology Innovation 2030—“New Generation Artificial Intelligence” Major Project, NSFC projects, the 2035 Innovation Task of the Institute of Automation, Chinese Academy of Sciences, as well as the CAAI-Huawei MindSpore Academic Award Fund and the Intelligent Base program. To promote continued development in this field, the research team has open-sourced the code and the newly collected trimodal dataset.

Paper link: https://ieeexplore.ieee.org/document/10089190

Code link: https://github.com/ChangdeDu/BraVL

Data link: https://figshare.com/articles/dataset/BraVL/17024591
