Laboratory of Brain Atlas and Brain-Inspired Intelligence and Xi'an Jiaotong University Propose Attention Spiking Neural Networks


Recently, Researcher Guoqi Li from the Laboratory of Brain Atlas and Brain-Inspired Intelligence at the Institute of Automation, Chinese Academy of Sciences, in collaboration with Professor Guangshe Zhao from Xi'an Jiaotong University, published a paper titled "Attention Spiking Neural Networks" in IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), a top-tier AI journal. The work integrates attention mechanisms into million-scale spiking neural networks and, for the first time, achieves performance on the ImageNet-1K dataset comparable to that of traditional artificial neural networks (ANNs), with a theoretical energy efficiency 31.8 times higher than that of an ANN of equivalent structure. The approach significantly improves task performance while greatly reducing energy consumption, offering new insights for developing low-power neuromorphic systems.

In recent years, traditional ANNs have demonstrated human-level or even superhuman ability on certain tasks. These successes, however, come at an enormous energy cost, whereas the human brain completes similar or even more complex tasks with extremely low energy consumption. Making machine intelligence as efficient as the brain remains a central goal for researchers.

Neuromorphic computing based on spiking neural networks (SNNs) offers a promising low-power alternative to conventional AI. Spiking neurons emulate the complex spatiotemporal dynamics of biological neurons and in theory have greater expressive power than existing artificial neurons. They also inherit the brain's spike-based communication, which is the key to the low power consumption of SNNs. On one hand, because spikes are binary, neuromorphic systems only need to perform low-energy synaptic additions instead of multiply-accumulate operations; on the other, their event-driven nature means computation is triggered only when neurons fire. Achieving high task performance at low firing rates is therefore a crucial problem in neuromorphic computing.

The human brain naturally and effectively picks out important information in complex scenes, an ability known as attention. Although attention mechanisms have been used in deep learning with great success, applying them to neuromorphic computing remains highly challenging.
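To make the two sources of efficiency concrete, here is a minimal NumPy sketch of one fully connected layer of leaky integrate-and-fire (LIF) neurons. It is a generic illustration, not the paper's code: the function name `lif_layer`, the time constant `tau`, the threshold `v_th`, and the hard reset to 0 are all assumptions. Because inputs are 0/1, the weighted sum reduces to adding up the weight rows of the inputs that spiked (no synaptic multiplies), and inputs that stay silent cost nothing. The leak term is a per-neuron update, not a per-synapse operation.

```python
import numpy as np


def lif_layer(spikes_in, weights, tau=2.0, v_th=1.0):
    """One fully connected layer of LIF neurons driven by binary spikes.

    spikes_in: (T, N_in) array of 0/1; weights: (N_in, N_out).
    Returns output spikes (T, N_out) and the number of synaptic additions.
    """
    T = spikes_in.shape[0]
    n_out = weights.shape[1]
    v = np.zeros(n_out)                        # membrane potentials
    out = np.zeros((T, n_out), dtype=np.int8)
    n_adds = 0
    for t in range(T):
        active = np.flatnonzero(spikes_in[t])  # event-driven: only inputs that spiked
        # With binary inputs, W^T s is just the sum of the active rows of W.
        current = weights[active].sum(axis=0)
        n_adds += active.size * n_out          # one addition per active synapse
        v = v + (current - v) / tau            # leaky integration (per neuron)
        fired = v >= v_th
        out[t] = fired
        v[fired] = 0.0                         # hard reset after a spike
    return out, n_adds


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    s = (rng.random((8, 100)) < 0.1).astype(np.int8)  # ~10% input firing rate
    w = rng.normal(0.0, 0.3, size=(100, 10))
    out, adds = lif_layer(s, w)
    print(adds, "additions vs", 8 * 100 * 10, "MACs for a dense ANN layer")
```

The lower the input firing rate, the fewer additions are performed, which is why the article frames low firing rates as central to SNN efficiency.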

There are three fundamental issues to address when incorporating attention into SNNs. First, the energy efficiency of SNNs comes from their event-driven, spike-based communication, which an attention mechanism must not disrupt. Second, SNNs are used in diverse application scenarios, so the design must be flexible enough to remain effective across settings. Third, binary spike communication can cause gradients to vanish or explode, degrading the performance of deep SNNs; any added attention mechanism should at least not worsen this problem.
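The third issue stems from the spike function itself. The sketch below is a generic illustration in NumPy, not the paper's training code. The step function used in the forward pass has a zero derivative almost everywhere, so SNN training commonly substitutes a smooth surrogate, here the derivative of a steep sigmoid. The steepness `alpha`, the threshold `v_th`, and the helper names are assumptions. The last helper shows how a per-layer gradient gain slightly below or above 1 compounds over depth, which is the vanishing/exploding behavior described above.

```python
import numpy as np


def heaviside(v, v_th=1.0):
    """Forward pass: a binary spike wherever the membrane potential reaches threshold."""
    return (np.asarray(v) >= v_th).astype(np.float64)


def surrogate_grad(v, v_th=1.0, alpha=4.0):
    """Backward-pass stand-in: derivative of sigmoid(alpha * (v - v_th)).

    The true derivative of the step is zero everywhere except at v_th,
    so it would pass no useful gradient on its own.
    """
    s = 1.0 / (1.0 + np.exp(-alpha * (np.asarray(v) - v_th)))
    return alpha * s * (1.0 - s)


def gradient_scale(per_layer_gain, depth):
    """Gradient magnitude after back-propagating through `depth` layers,
    assuming each layer multiplies it by the same gain."""
    return per_layer_gain ** depth


if __name__ == "__main__":
    print(heaviside([0.5, 1.0, 1.5]))
    print(surrogate_grad([0.0, 1.0, 2.0]))
    print(gradient_scale(0.8, 50), gradient_scale(1.2, 50))
```

With 50 layers, a gain of 0.8 shrinks the gradient to roughly 1.4e-5 while a gain of 1.2 inflates it to roughly 9.1e3, so a module inserted into every block must keep its gain close to 1.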

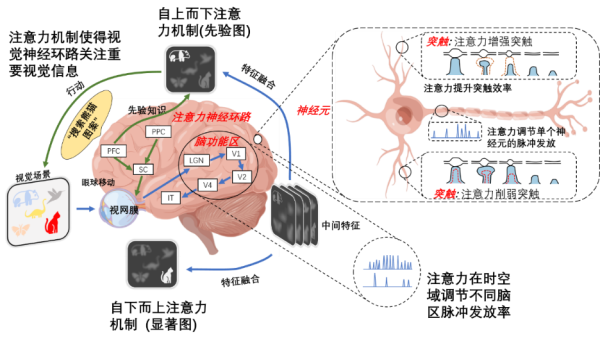

Figure 1. The brain features attention mechanisms across hierarchical structures.

As shown in Figure 1, attention in the brain primarily works by regulating neural spiking across different regions and neurons. Inspired by this, the study uses attention mechanisms to optimize membrane potential distributions within SNNs, enhancing important features while suppressing unnecessary ones to regulate spike firing. The network architecture is shown in Figure 2.

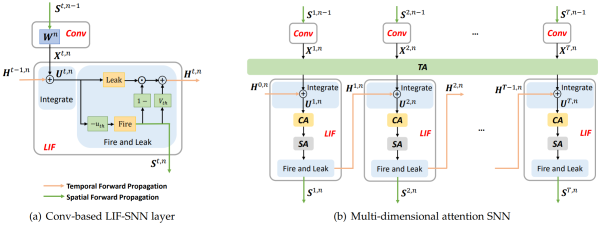

Figure 2. Multi-dimensional attention SNN where attention regulates membrane potential distribution.
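The core idea of Figure 2, attention acting on the membrane potential rather than on the binary spikes, can be sketched as follows. This is a simplified illustration under assumed LIF dynamics (hard reset, `tau=2`, `v_th=1`), and the helper names are hypothetical: attention weights in [0, 1] rescale each neuron's input current before integration, so spikes stay binary and event-driven while unimportant neurons are driven below threshold.

```python
import numpy as np


def lif_spikes(current, tau=2.0, v_th=1.0):
    """LIF dynamics over the leading time axis; returns binary spikes."""
    v = np.zeros(current.shape[1:])
    spikes = np.zeros(current.shape, dtype=np.int8)
    for t in range(current.shape[0]):
        v = v + (current[t] - v) / tau   # membrane potential update
        fired = v >= v_th
        spikes[t] = fired
        v = np.where(fired, 0.0, v)      # hard reset
    return spikes


def attention_lif(current, attn, tau=2.0, v_th=1.0):
    """Scale the input current by attention weights in [0, 1] before
    integration, reshaping the membrane potential distribution."""
    return lif_spikes(current * attn, tau=tau, v_th=v_th)


if __name__ == "__main__":
    current = np.full((4, 2), 2.0)    # 4 timesteps, 2 neurons, same input
    attn = np.array([1.0, 0.2])       # neuron 0 "important", neuron 1 "background"
    print(lif_spikes(current).sum(), "spikes without attention")
    print(attention_lif(current, attn).sum(), "spikes with attention")
```

Here both neurons fire at every step without attention, while the down-weighted neuron never reaches threshold with attention, halving the spike count without changing the spiking mechanism itself.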

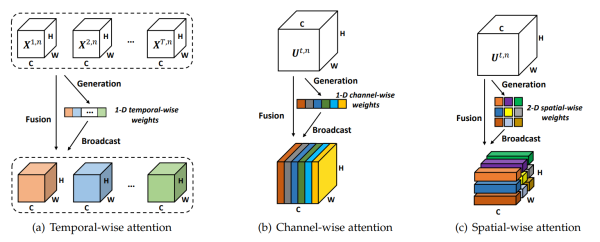

Furthermore, to adapt the attention SNN to various applications, the study combines temporal, channel, and spatial dimensions to learn "when," "what," and "where" is important, as illustrated in Figure 3.

Figure 3. Illustration of temporal, channel, and spatial attention dimensions.
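A minimal way to picture the three dimensions is one sigmoid gate per axis of a (T, C, H, W) feature tensor. The sketch below is hypothetical: `tcs_attention`, the small mixing matrices `w_t` and `w_c`, and the scalar weights `a` and `b` (standing in for a learned spatial convolution) are assumptions, and this article does not say how the paper combines the three gates. Here they are simply multiplied together.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def tcs_attention(x, w_t, w_c, a=1.0, b=1.0):
    """Hypothetical temporal-channel-spatial gate for x of shape (T, C, H, W).

    w_t: (T, T) mixes per-timestep statistics   -> "when"
    w_c: (C, C) mixes per-channel statistics    -> "what"
    a, b: weights on mean/max maps over (T, C)   -> "where"
    Returns the reweighted tensor and the three gates.
    """
    g_t = sigmoid(w_t @ x.mean(axis=(1, 2, 3)))                       # (T,)
    g_c = sigmoid(w_c @ x.mean(axis=(0, 2, 3)))                       # (C,)
    g_s = sigmoid(a * x.mean(axis=(0, 1)) + b * x.max(axis=(0, 1)))   # (H, W)
    out = x * g_t[:, None, None, None] * g_c[None, :, None, None] * g_s[None, None]
    return out, (g_t, g_c, g_s)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 8, 6, 6))
    out, (g_t, g_c, g_s) = tcs_attention(
        x, 0.1 * rng.normal(size=(4, 4)), 0.1 * rng.normal(size=(8, 8))
    )
    print(out.shape, g_t.shape, g_c.shape, g_s.shape)
```

Every gate lies in (0, 1), so the output can only attenuate features; applied to the input current as in the membrane-potential view of Figure 2, this is what lets attention lower the spike count.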

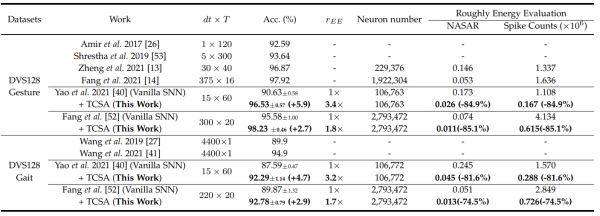

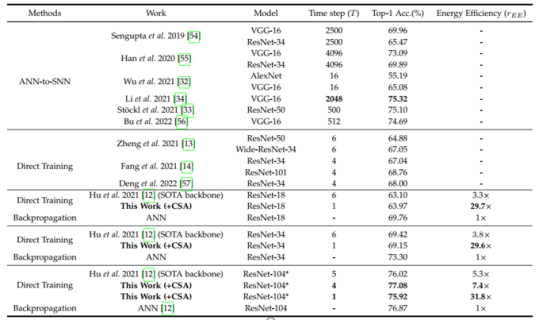

The research team conducted experiments on both event-based action recognition datasets and the static image classification dataset ImageNet-1K. Results show that adding the attention module not only significantly improves SNN performance but also drastically reduces spike counts, lowering energy consumption. On the DVS128 Gait dataset, the multi-dimensional attention module reduced spike firing by 81.6% while delivering a 4.7% performance boost (Table 1). On ImageNet-1K, the attention SNN achieved ANN-comparable performance for the first time, with theoretical energy efficiency 31.8 times higher than an equivalent ANN (Table 2).

Table 1. Performance comparison on DVS128 Gesture/Gait.

Table 2. Performance comparison on ImageNet-1K.
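The article does not spell out how the "theoretical energy efficiency" is computed. A convention common in the SNN literature, assumed here rather than taken from the paper, charges an ANN one multiply-accumulate (MAC) per FLOP and an SNN one accumulate (AC) per synaptic operation, where synaptic operations scale with the firing rate and the number of timesteps. The per-operation energies below are the widely quoted 45 nm, 32-bit floating-point estimates; the layer size, firing rate, timestep count, and spike counts are purely illustrative.

```python
# Assumed per-operation energies (picojoules), 32-bit float at 45 nm.
E_MAC_PJ = 4.6  # multiply-accumulate, used by ANNs
E_AC_PJ = 0.9   # accumulate (addition), used by SNNs


def ann_energy_pj(flops: float) -> float:
    """Every connection performs one MAC per inference."""
    return flops * E_MAC_PJ


def snn_energy_pj(flops: float, firing_rate: float, timesteps: int) -> float:
    """Synaptic operations occur only when a presynaptic neuron spikes:
    SOPs = FLOPs x average firing rate x timesteps."""
    sops = flops * firing_rate * timesteps
    return sops * E_AC_PJ


def spike_reduction(spikes_before: int, spikes_after: int) -> float:
    """Fraction of spikes removed, e.g. by adding an attention module."""
    return 1.0 - spikes_after / spikes_before


if __name__ == "__main__":
    flops, timesteps, rate = 1e9, 4, 0.15  # illustrative values only
    ratio = ann_energy_pj(flops) / snn_energy_pj(flops, rate, timesteps)
    print(f"ANN/SNN energy ratio: {ratio:.1f}x")
    print(f"spike reduction: {spike_reduction(10_000, 1_840):.1%}")
```

Under this model, the energy ratio depends only on E_MAC / (E_AC x firing rate x timesteps), which is why cutting spikes with attention translates directly into a larger efficiency advantage.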

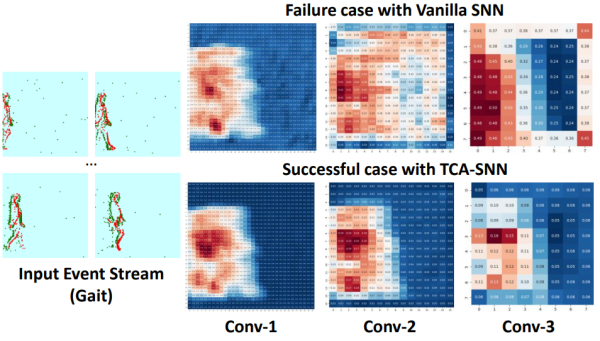

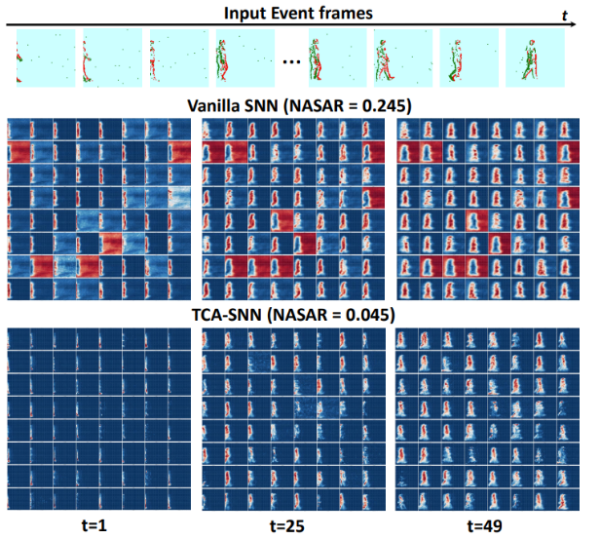

The study also proposed a new visualization method to analyze why the attention module improves performance while reducing spikes. As shown in Figures 4 and 5, SNNs with attention focus on important information while suppressing irrelevant background noise (each pixel in the feature maps represents a neuron's firing rate—redder indicates higher firing, bluer indicates lower). In the original feature maps, noise features and neurons have high firing rates. Therefore, suppressing noise significantly reduces overall spikes.

Figure 4. Case study on the Gait dataset.

Figure 5. Spike responses in the DVS128 Gait dataset. Attention significantly suppresses background noise.
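The maps in Figures 4 and 5 encode one firing rate per neuron. Given a recorded spike tensor of shape (T, C, H, W), that quantity is simply the spike count averaged over timesteps. The short sketch below assumes this layout and uses hypothetical helper names; the paper's actual visualization pipeline may differ.

```python
import numpy as np


def firing_rate_maps(spikes):
    """spikes: binary array of shape (T, C, H, W).

    Returns (C, H, W): each entry is one neuron's firing rate over the
    T timesteps, i.e. the value a heat-map pixel would encode.
    To plot channel c with red = high and blue = low, one option is
    matplotlib.pyplot.imshow(rates[c], cmap="coolwarm").
    """
    return spikes.mean(axis=0)


def total_spikes(spikes):
    """Overall spike count, the quantity attention is reported to reduce."""
    return int(spikes.sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    plain = (rng.random((8, 2, 4, 4)) < 0.5).astype(np.int8)
    mask = np.zeros((4, 4), dtype=np.int8)
    mask[1:3, 1:3] = 1                      # hypothetical "foreground" region
    attended = plain * mask                 # background neurons silenced
    print(total_spikes(plain), "->", total_spikes(attended))
    print(firing_rate_maps(attended)[0])
```

Because background pixels dominate the frame, silencing them removes most spikes even though the foreground response is unchanged, matching the explanation above.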

Additionally, the study used block dynamical isometry theory to prove that inserting the proposed attention module into deep SNNs preserves dynamical isometry, meaning that the module does not make gradients vanish or explode and therefore does not introduce performance degradation.
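For reference, the condition can be stated roughly as follows in the general block dynamical isometry framework; this is the generic definition, not the paper's proof. Let $J_i$ be the input-output Jacobian of the $i$-th block, $J = J_L \cdots J_1$ the Jacobian of the whole network, and $\phi(A) = \mathbb{E}[\operatorname{tr}(A)]/n$ the normalized trace of an $n \times n$ matrix. Gradient norms are preserved when the first moment of $J J^{\top}$ is close to 1 and its spread is close to 0. Under the framework's assumption that the block Jacobians are freely independent, the first moment factorizes over serially connected blocks, so it suffices for each block, including an inserted attention module, to keep its own moment near 1:

$$
\phi\!\left(J J^{\top}\right) = \prod_{i=1}^{L} \phi\!\left(J_i J_i^{\top}\right) \approx 1,
\qquad
\phi\!\left(\left(J J^{\top}\right)^{2}\right) - \phi^{2}\!\left(J J^{\top}\right) \approx 0 .
$$

If a block had $\phi(J_i J_i^{\top})$ consistently below or above 1, the product would shrink or grow geometrically with depth, which is exactly the vanishing or exploding behavior the third design issue warns against.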

In summary, this work explored how to use attention in SNNs and found that adding it as an auxiliary module can significantly boost task performance while greatly reducing spike firing. Visualizing the responses of the original and attention-equipped SNNs shows that attention helps the network focus on important information and suppress noise, which otherwise accounts for many spikes. Attention therefore brings SNN-based neuromorphic computing closer to brain-like efficiency: lower energy consumption with equal or even better performance.

The first author of the paper is Man Yao, a PhD student at Xi'an Jiaotong University, and the corresponding author is Researcher Guoqi Li of the Institute of Automation, Chinese Academy of Sciences. Co-authors include Researcher Bo Xu of the Institute of Automation, Professor Guangshe Zhao of Xi'an Jiaotong University, Professor Yonghong Tian of Peking University, and graduate students Hengyu Zhang and Yifan Hu and Assistant Professor Lei Deng of Tsinghua University. The work was supported by the Beijing Outstanding Young Scientist Fund, a Key Project of the National Natural Science Foundation of China, and a Regional Innovation Joint Key Project.

Paper link:

https://ieeexplore.ieee.org/document/10032591

Code for this paper has been open-sourced in the SpikingJelly framework:

https://github.com/fangwei123456/spikingjelly/pull/329

Copyright Institute of Automation, Chinese Academy of Sciences. All Rights Reserved.

Address: 95 Zhongguancun East Road, 100190, BEIJING, CHINA

Email: brain-ai@ia.ac.cn