N-Omniglot: A Large-Scale Neuromorphic Dataset for Spatio-Temporal Sparse Few-Shot Learning


The Brain-Inspired Cognitive AI Research Group at Brain-AI Lab has published a paper, "N-Omniglot, a large-scale neuromorphic dataset for spatio-temporal sparse few-shot learning", in the Nature Portfolio journal Scientific Data. N-Omniglot is designed for spatio-temporally sparse few-shot learning and provides a more challenging benchmark for training and evaluating spiking neural networks (SNNs).

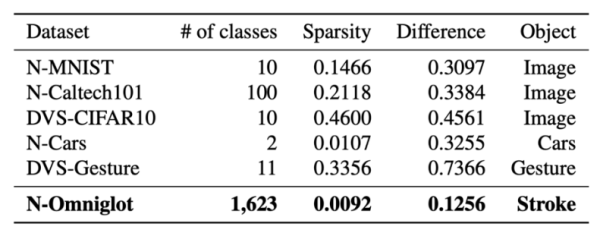

Much of deep learning's success relies on the availability of large datasets such as ImageNet and COCO. However, existing datasets are not well suited to brain-inspired AI, and to SNN development in particular. Several neuromorphic datasets have been created with dynamic vision sensors (DVS) to promote SNN research, but they often have low temporal correlation and fail to reflect the rich time-based information processing inherent to biological systems. Beyond its energy-efficient sparse coding, the human brain is also remarkably good at learning new concepts from just a few examples, which remains an open challenge in SNN-based machine learning.

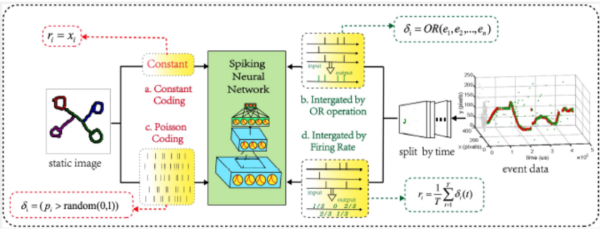

To address these limitations, the team introduced N-Omniglot, the first neuromorphic dataset tailored for few-shot learning. The original Omniglot dataset is a widely used benchmark in this domain, consisting of 1623 handwritten characters from 50 alphabets, each with only 20 examples. Because Omniglot is traditionally treated as a set of static images, the rich temporal information in the act of writing is ignored.

According to first author and PhD student Yang Li, SNNs remain in their infancy for neuromorphic few-shot learning: even with such a dataset available, little algorithmic work yet supports the task. To highlight the differences between N-Omniglot and encoded Omniglot, all experiments were conducted on both. As shown in the figure, the team used Poisson and constant encoding for the static images. DVS cameras offer high temporal resolution, but an overly long time axis is computationally burdensome for current clock-driven SNN algorithms, so the team reduced the event data to a manageable number of frames using logical OR operations and spike-rate thresholds.

Figure: Encoding of static images and event data preprocessing

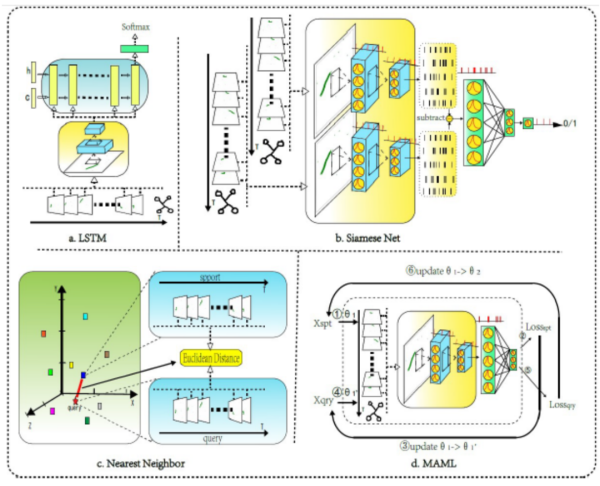
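The encodings and event preprocessing described above can be sketched roughly as follows. This is a minimal illustration in plain Python, not the team's implementation. It assumes pixel intensities in [0, 1], events given as hypothetical `(t, x, y)` tuples with polarity ignored, and equal-width time bins; the function names and the `threshold` parameter are invented for the example.

```python
import random

def poisson_encode(image, steps, rng):
    """Poisson-style rate coding: at each step a pixel spikes with
    probability equal to its intensity (assumed to lie in [0, 1])."""
    return [[[1 if rng.random() < p else 0 for p in row] for row in image]
            for _ in range(steps)]

def constant_encode(image, steps):
    """Constant coding: present the same analog image at every step."""
    return [[row[:] for row in image] for _ in range(steps)]

def events_to_frames(events, height, width, num_frames, t_end, threshold=1):
    """Bin (t, x, y) events into num_frames binary frames.

    threshold=1 behaves like a logical OR over each time bin;
    a larger threshold only emits a spike where at least that many
    events fell on the pixel within the bin (a spike-rate threshold).
    """
    counts = [[[0] * width for _ in range(height)] for _ in range(num_frames)]
    for t, x, y in events:
        # Map the timestamp to a bin, clamping t == t_end into the last bin.
        idx = min(int(t * num_frames / t_end), num_frames - 1)
        counts[idx][y][x] += 1
    return [[[1 if c >= threshold else 0 for c in row] for row in frame]
            for frame in counts]

# Example: three events on a 2x2 sensor, split into two frames.
events = [(0, 0, 0), (10, 0, 0), (60, 1, 1)]
frames_or = events_to_frames(events, 2, 2, num_frames=2, t_end=100)
frames_rate = events_to_frames(events, 2, 2, num_frames=2, t_end=100, threshold=2)
```

With `threshold=1` every pixel that saw at least one event in a bin is set, while `threshold=2` keeps only the pixel hit twice in the first bin. Raising `num_frames` spreads the same events over more, sparser frames.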

Co-author and PhD student Yiting Dong explained that, to demonstrate N-Omniglot's utility and its potential to pose new challenges for SNN algorithms, the study benchmarked four SNN baselines: adaptations of two classic general-purpose classification methods and of two few-shot learning methods.

Figure: Four few-shot learning baselines tested on N-Omniglot

The nearest neighbor approach measures how separable the samples are and serves as a reference point for the other methods. For event data, the team adapted nearest neighbor classification to compare each new input with every sample in the training set under a chosen distance metric (here, Euclidean distance). For directly encoded static images, the team avoided unnecessary computation by comparing the raw images; for the other encodings, they calculated distances between the synthetic frames at each timestep.
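A minimal sketch of nearest neighbor classification over frame sequences is shown below. It assumes each sample is a list of 2D frames and that per-timestep Euclidean distances are summed over time. The source does not say how the per-timestep distances are combined, so the summation and all function names here are assumptions.

```python
import math

def frame_distance(frame_a, frame_b):
    """Euclidean distance between two frames of the same shape."""
    return math.sqrt(sum((a - b) ** 2
                         for row_a, row_b in zip(frame_a, frame_b)
                         for a, b in zip(row_a, row_b)))

def sequence_distance(seq_a, seq_b):
    """Sum of per-timestep frame distances (assumed aggregation)."""
    return sum(frame_distance(fa, fb) for fa, fb in zip(seq_a, seq_b))

def nearest_neighbor(query, train_samples, train_labels):
    """Return the label of the training sample closest to the query."""
    distances = [sequence_distance(query, sample) for sample in train_samples]
    best = min(range(len(distances)), key=distances.__getitem__)
    return train_labels[best]

# Two training sequences of two 1x2 frames each, and one query.
train = [[[[1, 0]], [[1, 0]]],
         [[[0, 1]], [[0, 1]]]]
labels = ["a", "b"]
query = [[[1, 0]], [[0, 0]]]
prediction = nearest_neighbor(query, train, labels)
```

For a static image compared directly, the same function works with a sequence of length one.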

The difficulty of few-shot learning comes from having many classes and few examples per class. Even so, such tasks can be treated as standard classification problems and handled with general-purpose classifiers. For this purpose the team built a spiking convolutional neural network with leaky integrate-and-fire (LIF) neurons, consisting of two convolutional layers, each followed by a stride-2 average pooling layer, and two fully connected layers. Because spike generation is non-differentiable, they trained the network with surrogate gradients. Although it was not designed specifically for few-shot learning, this approach still achieved reasonable results. For comparison, they also implemented an ANN model (shown in Figure a) that uses convolutional layers as feature extractors, connected to Long Short-Term Memory (LSTM) units that integrate temporal information.
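To make the LIF dynamics and the surrogate gradient idea concrete, here is a minimal single-neuron sketch. The discrete update rule, the hard reset, the sigmoid-shaped surrogate, and the parameter values (`tau`, `v_threshold`, `alpha`) are common choices assumed for illustration. The source does not state which neuron parameters or surrogate function the team used.

```python
import math

def lif_forward(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one LIF neuron over a sequence of input currents.

    Each step the membrane potential leaks towards v_reset and
    integrates the input; crossing the threshold emits a spike and
    resets the potential (hard reset).
    """
    v = v_reset
    spikes, potentials = [], []
    for x in inputs:
        v = v + (x - (v - v_reset)) / tau
        potentials.append(v)
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes, potentials

def surrogate_grad(v, v_threshold=1.0, alpha=2.0):
    """Derivative of sigmoid(alpha * (v - v_threshold)).

    Used in the backward pass in place of the derivative of the
    Heaviside step, which is zero almost everywhere.
    """
    s = 1.0 / (1.0 + math.exp(-alpha * (v - v_threshold)))
    return alpha * s * (1.0 - s)

spikes, potentials = lif_forward([1.5, 1.5, 1.5, 1.5])
grads = [surrogate_grad(v) for v in potentials]
```

In the forward pass the spike is still a hard 0/1 decision. Only the gradient is smoothed, and it is largest when the potential sits at the threshold.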

Siamese Networks are a classic metric-based few-shot learning method. Since standard Siamese Networks cannot handle neuromorphic data, the team adapted them with LIF-based SNN backbones to add temporal processing capability. A Siamese Network takes two inputs at a time; each pair is labeled 1 if the inputs belong to the same class and 0 otherwise, and the network learns to compare their features. As shown in Figure b, the first half of the network is shared between the two inputs, and the difference of their feature maps is fed to a final fully connected layer. During testing, each query is compared with all support samples, and the class with the highest predicted probability is selected.
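The pair labeling and the test-time comparison can be sketched as below. The feature vectors stand in for the output of the shared SNN branch (for example, firing rates), and the absolute difference, the hand-picked weights, and the function names are assumptions made for illustration, not details from the paper.

```python
import math

def make_pairs(samples, labels):
    """Build all unordered pairs, labeled 1 for same class and 0 otherwise."""
    pairs = []
    for i in range(len(samples)):
        for j in range(i + 1, len(samples)):
            target = 1 if labels[i] == labels[j] else 0
            pairs.append((samples[i], samples[j], target))
    return pairs

def siamese_score(feat_a, feat_b, weights, bias):
    """Feature difference -> fully connected layer -> sigmoid probability.

    feat_a and feat_b are assumed to come from the same shared branch;
    the absolute difference is an assumed choice of comparison.
    """
    diff = [abs(a - b) for a, b in zip(feat_a, feat_b)]
    logit = sum(w * d for w, d in zip(weights, diff)) + bias
    return 1.0 / (1.0 + math.exp(-logit))

def classify(query_feat, support_feats, support_labels, weights, bias):
    """Compare the query with every support sample and pick the best match."""
    scores = [siamese_score(query_feat, s, weights, bias) for s in support_feats]
    return support_labels[scores.index(max(scores))]

pairs = make_pairs(["s0", "s1", "s2"], ["a", "a", "b"])
# Negative weights: larger feature differences lower the same-class probability.
predicted = classify([0.9, 0.1], [[1.0, 0.0], [0.0, 1.0]], ["a", "b"],
                     weights=[-2.0, -2.0], bias=1.0)
```

In a trained model the weights of the final layer would be learned from the labeled pairs rather than set by hand.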

Model-Agnostic Meta-Learning (MAML) is a classic optimization-based few-shot learning algorithm. As with the Siamese Network, the team adapted it with LIF-based SNN backbones to handle neuromorphic data. MAML aims to learn initial weights from which a network can adapt to a new task in a few gradient steps. For each training batch, a fixed number of classes and of samples per class is drawn. The weights are copied and updated for a few steps on the support set, and the error of the adapted weights on the query set is then back-propagated to update the original weights. During testing, the support set is used for adaptation before evaluation on the query set.
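The inner and outer loops of MAML can be illustrated on a deliberately tiny problem. The sketch below swaps the SNN for a one-parameter linear model `y = w * x` with a mean squared error loss, so the second-order meta-gradient can be written by hand for a single inner step. The model, the toy tasks, and the learning rates are all assumptions chosen for readability, not the team's setup.

```python
def loss(w, data):
    """Mean squared error of the model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def grad(w, data):
    """First derivative of the loss with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def hess(data):
    """Second derivative of the loss with respect to w (constant in w)."""
    return sum(2 * x * x for x, _ in data) / len(data)

def adapt(w, support, inner_lr, steps=1):
    """Inner loop: a few gradient steps on the support set, on a copy of w."""
    for _ in range(steps):
        w = w - inner_lr * grad(w, support)
    return w

def maml_step(w, tasks, inner_lr, outer_lr):
    """Outer loop: one meta-update using one inner step per task."""
    meta_grad = 0.0
    for support, query in tasks:
        w_fast = adapt(w, support, inner_lr, steps=1)
        # Chain rule through the inner step:
        # d L_q(w_fast) / dw = L_q'(w_fast) * (1 - inner_lr * L_s''(w))
        meta_grad += grad(w_fast, query) * (1.0 - inner_lr * hess(support))
    return w - outer_lr * meta_grad / len(tasks)

# Two toy tasks with slopes 1 and 3, each as (support set, query set).
tasks = [([(1.0, 1.0)], [(2.0, 2.0)]),
         ([(1.0, 3.0)], [(2.0, 6.0)])]
w = 0.0
for _ in range(100):
    w = maml_step(w, tasks, inner_lr=0.1, outer_lr=0.05)

# Test-time use: adapt on a task's support set, then evaluate on its query set.
support, query = tasks[0]
w_adapted = adapt(w, support, inner_lr=0.1)
```

On these two tasks the meta-learned `w` settles midway between the task slopes, from where a single inner step on either support set reduces that task's query loss.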

Assistant Researcher Dongcheng Zhao noted that all four methods performed worse on N-Omniglot than on standard Omniglot. For example, the Siamese Net's accuracy on a 20-way 1-shot task dropped from 75.3% to 54.0%, a fall of 21.3 percentage points. This is partly because the new dataset is more spatially sparse and has lower temporal similarity than static or Poisson-encoded images, which poses new challenges for SNN learning. Another factor is the lack of preprocessing techniques tailored specifically to neuromorphic datasets.

Notably, the team also tested the two classic few-shot methods at different simulation lengths and found that longer simulations reduced accuracy. A longer simulation divides the events into more frames, which makes it harder to maintain the connections between consecutive frames. This suggests that the descriptors used to represent event data are crucial to improving SNNs' ability to capture important spatiotemporal features. N-Omniglot is thus an effective, robust, and challenging neuromorphic benchmark for developing future SNNs.

This research is part of the team's long-term Brain-Inspired Cognitive Intelligence Engine (BrainCog) project. Corresponding author Researcher Yi Zeng commented: "This progress can be seen as a brick in the foundation of a towering structure. It provides a benchmark for SNNs in few-shot learning and new challenges for efficient training methods. More importantly, our team is now better prepared to continue developing the theoretical frameworks, data engines, and computing infrastructure needed to build brain-inspired AI systems that approach human-level cognitive intelligence."

Original article:

https://www.nature.com/articles/s41597-022-01851-z

Dataset page:

http://www.brain-cog.network/dataset/N-Omniglot/

Code:

https://github.com/Brain-Cog-Lab/N-Omniglot

BrainCog AI Engine (open source):

http://www.brain-cog.network
