Research Reveals the Emergence of Human-Like Object Concept Representations in Multimodal Large Models


Humans have the remarkable ability to conceptualize objects in the natural world—a cognitive capability long regarded as central to human intelligence. When we see a “dog,” “car,” or “apple,” we not only recognize their physical features (size, color, shape) but also understand their functions, emotional value, and cultural significance. This multidimensional conceptual representation forms the foundation of human cognition. With the explosive growth of large language models (LLMs) such as ChatGPT, a fundamental question has emerged: can these models develop human-like object concept representations from language and multimodal data?

Recently, the Neural Computation and Brain-Computer Interaction team at the Laboratory of Brain Atlas and Brain-Inspired Intelligence, Institute of Automation, Chinese Academy of Sciences, together with collaborators from the Center for Excellence in Brain Science and Intelligence Technology, combined behavioral experiments with neuroimaging analysis to provide the first evidence that multimodal large language models (MLLMs) can spontaneously form object concept representation systems highly similar to those of humans. This research not only opens new paths in AI cognitive science but also offers a theoretical framework for building artificial intelligence systems with human-like cognitive structures. The study, titled “Human-like object concept representations emerge naturally in multimodal large language models,” was published in Nature Machine Intelligence.

Traditional AI research has focused on object recognition accuracy but has rarely explored whether models truly “understand” the meaning of objects. Corresponding author Dr. Huiguang He noted: “Current AI can distinguish images of cats and dogs, but there remains an essential difference between such ‘recognition’ and human-level ‘understanding’ of cats and dogs.”

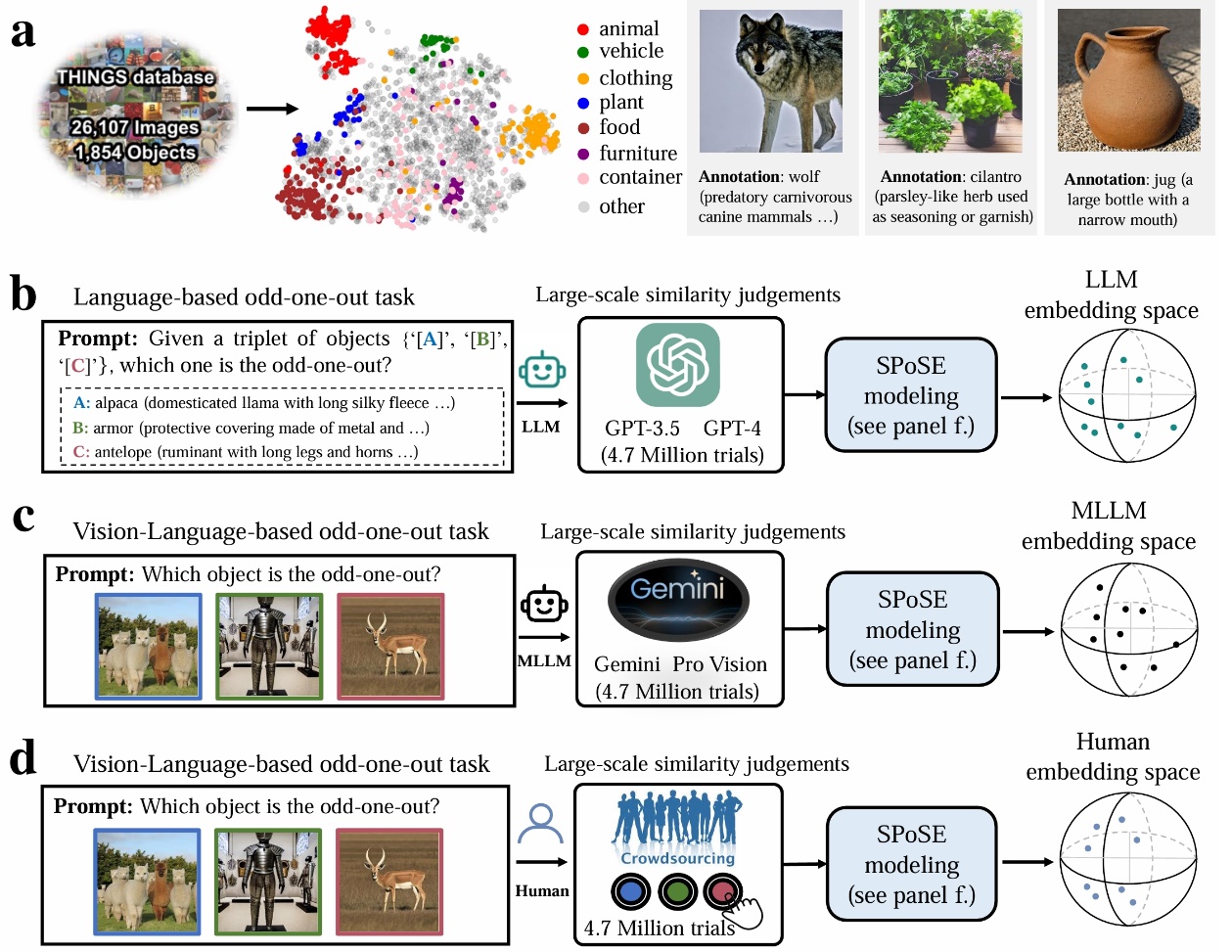

Starting from classic theories in cognitive neuroscience, the team designed an innovative paradigm that integrates computational modeling, behavioral experiments, and brain science. Using the well-established “triplet odd-one-out” task from cognitive psychology, researchers presented both the models and human participants with triplets of concepts randomly drawn from 1,854 everyday categories and asked them to pick the one least similar to the other two. By analyzing 4.7 million behavioral judgment records, the team constructed the first-ever “concept map” of large AI models.
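The logic of a single odd-one-out trial can be illustrated with a small sketch. It assumes that each concept is represented by an embedding vector and that similarity is a dot product; the article specifies neither. The two concepts most similar to each other are treated as belonging together, and the remaining one is reported as the odd one out. The helper names `sample_triplets` and `odd_one_out` and the toy vectors are hypothetical.

```python
import numpy as np


def sample_triplets(n_concepts: int, n_triplets: int, seed: int = 0) -> list[tuple[int, int, int]]:
    """Draw triplets of distinct concept indices uniformly at random."""
    rng = np.random.default_rng(seed)
    return [
        tuple(int(x) for x in rng.choice(n_concepts, size=3, replace=False))
        for _ in range(n_triplets)
    ]


def odd_one_out(emb: np.ndarray, triplet: tuple[int, int, int]) -> int:
    """Return the concept index judged least similar to the other two.

    Assumption for illustration: similarity is the dot product of embeddings.
    """
    i, j, k = triplet
    # Map each candidate odd-one-out to the similarity of the pair it leaves behind.
    pair_similarity = {
        k: float(emb[i] @ emb[j]),
        j: float(emb[i] @ emb[k]),
        i: float(emb[j] @ emb[k]),
    }
    # The most similar pair "belongs together"; the excluded item is the odd one.
    return max(pair_similarity, key=pair_similarity.get)


# Hypothetical 2-D embeddings for three concepts: "dog", "cat", "car".
emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
print(odd_one_out(emb, (0, 1, 2)))  # 2, i.e. "car"
print(sample_triplets(1854, 3))
```

Repeating such trials many times, with people or with a model prompted with the three concepts, yields the kind of choice data the embedding analysis works from.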

Illustration of the experimental paradigm.

a. Example of the object concept set and images with language descriptions.

b-d. Behavioral experiment paradigms and conceptual embedding spaces for LLMs, MLLMs, and humans.

From the massive behavioral data of large models, researchers extracted 66 “mental dimensions” and assigned them semantic labels. Surprisingly, these dimensions were highly interpretable and showed significant correlations with neural activity patterns in category-selective brain regions, such as the fusiform face area (FFA) for faces, the parahippocampal place area (PPA) for scenes, and the extrastriate body area (EBA) for bodies.
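The article does not say how the 66 dimensions were extracted. One established approach for odd-one-out data is a sparse, non-negative embedding in the style of SPoSE (sparse positive similarity embedding), assumed here for illustration. It models the probability that k is chosen as the odd one out of (i, j, k) as exp(x_i·x_j) / [exp(x_i·x_j) + exp(x_i·x_k) + exp(x_j·x_k)] and adds an L1 penalty so that only a few dimensions stay active. The sketch computes this objective with its analytic gradient and fits it by projected gradient descent; the function names, learning rate, penalty weight, and toy triplets are hypothetical.

```python
import numpy as np


def spose_loss_and_grad(X: np.ndarray, triplets: np.ndarray, lam: float):
    """Negative log-likelihood of odd-one-out choices plus an L1 penalty.

    X: (n_concepts, n_dims) non-negative embedding.
    triplets: (m, 3) integer array ordered so column 2 holds the chosen
    odd-one-out, i.e. (i, j) is the pair judged most similar.
    """
    i, j, k = triplets[:, 0], triplets[:, 1], triplets[:, 2]
    # Pairwise dot-product similarities; column 0 is the "kept together" pair.
    s = np.stack(
        [
            np.sum(X[i] * X[j], axis=1),
            np.sum(X[i] * X[k], axis=1),
            np.sum(X[j] * X[k], axis=1),
        ],
        axis=1,
    )
    # Numerically stable softmax over the three pairs.
    p = np.exp(s - s.max(axis=1, keepdims=True))
    p /= p.sum(axis=1, keepdims=True)
    m = len(triplets)
    loss = -np.mean(np.log(p[:, 0])) + lam * np.abs(X).sum()

    # d(loss)/d(s): softmax probabilities minus the one-hot observed choice.
    g = p.copy()
    g[:, 0] -= 1.0
    g /= m
    grad = np.zeros_like(X)
    # Chain rule through each dot product; np.add.at accumulates repeated indices.
    np.add.at(grad, i, g[:, [0]] * X[j] + g[:, [1]] * X[k])
    np.add.at(grad, j, g[:, [0]] * X[i] + g[:, [2]] * X[k])
    np.add.at(grad, k, g[:, [1]] * X[i] + g[:, [2]] * X[j])
    # Subgradient of the L1 penalty (0 at exactly zero weights).
    grad += lam * np.sign(X)
    return loss, grad


def fit_spose(triplets, n_concepts, n_dims, lam=1e-3, lr=0.5, steps=500, seed=0):
    """Projected gradient descent that keeps every embedding weight >= 0."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(0.0, 0.1, size=(n_concepts, n_dims))
    for _ in range(steps):
        _, grad = spose_loss_and_grad(X, triplets, lam)
        # Gradient step, then project back onto the non-negative orthant.
        X = np.maximum(X - lr * grad, 0.0)
    return X


# Toy usage (hypothetical data): 6 concepts, last column = chosen odd-one-out.
triplets = np.array([[0, 1, 2], [3, 4, 5], [0, 3, 5], [1, 2, 4]])
X = fit_spose(triplets, n_concepts=6, n_dims=4, steps=200)
print(spose_loss_and_grad(X, triplets, lam=1e-3)[0])
```

Under this approach, columns whose weights shrink to (near) zero are discarded after fitting. Each remaining column is a candidate dimension that can be named by inspecting the concepts loading most heavily on it. Its loadings can then be correlated, across objects, with responses in regions such as FFA, PPA, or EBA.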

The study also compared how closely each model's behavioral choices matched those of humans, finding that multimodal large models (e.g., Gemini_Pro_Vision, Qwen2_VL) achieved higher human consistency. Moreover, the research revealed that humans tend to combine visual features and semantic information when making decisions, whereas large models rely more on semantic labels and abstract concepts. This study shows that large language models are not mere “stochastic parrots” but contain internal representations resembling human understanding of real-world concepts.
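The article does not define “human consistency.” A simple proxy, assumed here for illustration, is the fraction of triplets on which a model picks the same odd-one-out as human participants. The function names and toy choice lists are hypothetical, and the published analysis may use a different measure.

```python
import numpy as np


def choice_consistency(model_choices, human_choices) -> float:
    """Fraction of triplets where the model's odd-one-out matches the human one.

    Choices are aligned triplet by triplet; each entry is the position
    (0, 1, or 2) of the item picked as the odd one out.
    """
    model_choices = np.asarray(model_choices)
    human_choices = np.asarray(human_choices)
    if model_choices.shape != human_choices.shape:
        raise ValueError("model and human choices must cover the same triplets")
    return float(np.mean(model_choices == human_choices))


def rank_models(choices_by_model: dict, human_choices) -> list[tuple[str, float]]:
    """Sort models from most to least consistent with human choices."""
    scores = {
        name: choice_consistency(choices, human_choices)
        for name, choices in choices_by_model.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy data (hypothetical): human choices and two models on four triplets.
human = [2, 0, 1, 2]
models = {"model_a": [2, 0, 1, 1], "model_b": [0, 0, 2, 1]}
print(rank_models(models, human))  # [('model_a', 0.75), ('model_b', 0.25)]
```

Individual human choices are themselves noisy, so raw agreement like this is often interpreted relative to how consistently people agree with one another on the same triplets.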

Associate Researcher Changde Du of the Institute of Automation is the first author, and Dr. Huiguang He is the corresponding author. Other main contributors include Dr. Le Chang from the Center for Excellence in Brain Science and Intelligence Technology. The study was supported by the CAS Frontier Science Key Research Program, the National Natural Science Foundation of China, the Beijing Natural Science Foundation, and the National Key Laboratory for Brain Cognition and Brain-Inspired Intelligence.

Changde Du, Kaicheng Fu, Bincheng Wen, Yi Sun, Jie Peng, Wei Wei, Ying Gao, Shengpei Wang, Chuncheng Zhang, Jinpeng Li, Shuang Qiu, Le Chang, Huiguang He. Human-like object concept representations emerge naturally in multimodal large language models. Nature Machine Intelligence (2025).

DOI: 10.1038/s42256-025-01049-z

Full text link: https://www.nature.com/articles/s42256-025-01049-z

Code: https://github.com/ChangdeDu/LLMs_core_dimensions

Dataset: https://osf.io/qn5uv/

Copyright Institute of Automation, Chinese Academy of Sciences. All Rights Reserved.

Address: 95 Zhongguancun East Road, 100190, BEIJING, CHINA

Email: brain-ai@ia.ac.cn